2·8 months ago

I say “no opinion on Devin”, like that’s gonna be the big thing this week.

Then Quiet-STaR comes out…

It just sounds like the creator made a thing that wasn’t what people wanted.

It just feels like the question to ask then isn’t “but how do I get them to choose the thing despite it not being what they want?”

“Hard work goes to waste when you make a thing that people don’t want” is … true. But I would say it’s a stretch to call it a “problem”. It’s just an inescapable reality. It’s almost tautological.

Look at houses. You made a village with a diverse bunch of houses. But more than half of those, nobody wants to live in. Then “how do I get people to live in my houses?” “Build houses that people actually want to live in.” Like, you can pay people money to live in your weird houses, sure, I just feel like you have missed the point of being an architect somewhat.

2·9 months ago

I posted a bunch of Substack versions of Zvi’s stuff here earlier and they didn’t get as many upvotes. So like the pigeons driven crazy by random stimulus in the intermittent reinforcement studies, I have only posted the Wordpress since.

Also they don’t have an annoying popup.

3·9 months ago

Can you judge if the model is being truthful or untruthful by looking at something like `|states . honesty_control_vector|`? Or dynamically chart mood through a conversation?

Can you keep a model chill by actively correcting the anger vector coefficient once it exceeds a given threshold?

Can you chart per-layer truthfulness through the layers to see if the model is being glibly vs cleverly dishonest? With glibly = “decides to be dishonest early”, cleverly = “decides to be dishonest late”.
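
To make these questions concrete, here is a rough sketch on toy NumPy arrays, not a real model. All three function names are made up for illustration, and it assumes you already have per-layer hidden states and a control vector from somewhere. It keeps the signed projection rather than the absolute value, since the sign says which way the state leans.

```python
import numpy as np


def projection_scores(states, direction):
    """Signed projection of each row of `states` onto the unit-normalised direction."""
    unit = direction / np.linalg.norm(direction)
    return states @ unit


def clamp_along(state, direction, threshold):
    """Subtract whatever part of the projection onto `direction` exceeds `threshold`."""
    unit = direction / np.linalg.norm(direction)
    coeff = float(state @ unit)
    excess = max(coeff - threshold, 0.0)
    return state - excess * unit


def first_layer_below(per_layer_scores, cutoff):
    """Index of the first layer whose score drops below `cutoff`, or None if none does."""
    for index, score in enumerate(per_layer_scores):
        if score < cutoff:
            return index
    return None


# Toy data: three "layers" of a 2-dimensional hidden state (hypothetical values).
layer_states = np.array([[2.0, 0.0], [0.0, 3.0], [-1.0, 1.0]])
honesty_direction = np.array([2.0, 0.0])  # stand-in for a real control vector

scores = projection_scores(layer_states, honesty_direction)
flip_layer = first_layer_below(scores, 0.0)
calmed = clamp_along(np.array([5.0, 1.0]), honesty_direction, 3.0)
```

Under this toy framing, an early `flip_layer` would be the “glib” case and a late one the “clever” case. Whether real control vectors behave this linearly is exactly the open question.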

2·1 year ago

Sure, but those systems are known to be useless. Far as I can tell, Zvi’s comment holds up. Falsely believing that an image was AI generated should not count as “AI involvement”, imo.

1·1 year ago

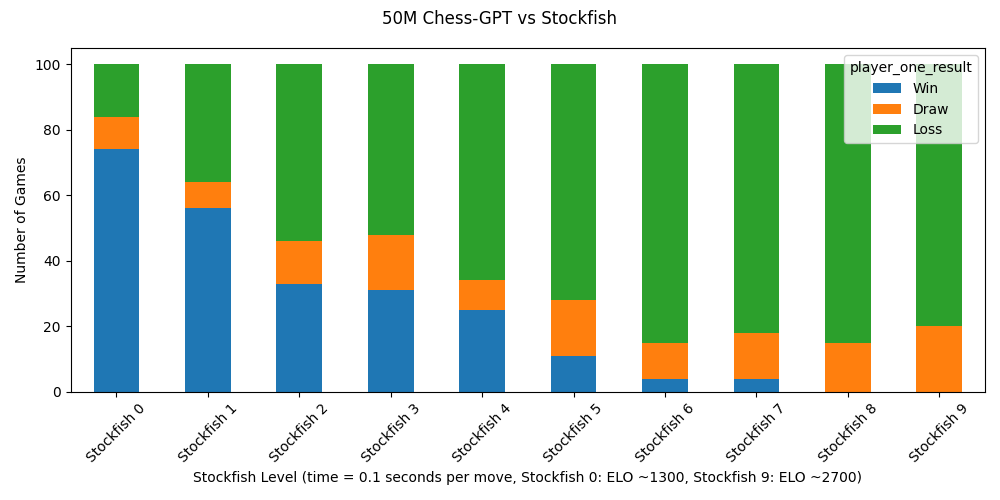

Mira: So why is “gpt-3.5-turbo-instruct” so much better than GPT-4 at chess? Probably because 6 months ago, someone checked in a chess eval in OpenAI’s evals repo.

I think this is silly. The eval tests it on 101 chess positions. That’s fine-tuning, not training.

2·1 year ago

I’d assume at some point the problem becomes more the memory bandwidth.

1·1 year ago

The AI race is entirely perpetuated by people who wish really hard they were helpless victims of the AI race so they could be excused for continuously perpetuating it in the pursuit of cool demos. Unfortunately, it just isn’t the case. OpenAI to their credit seem to have realized this, hence them not working on GPT-5 yet. You can see the mask come off on this in Nadella’s “we made them dance” address, where it’s very clear that AI risk simply is not at all a salient danger to them. Everything else is just whining. They should just come out and say “We could stop any time we want, we just don’t want to.” Then at least they’d be honest.

Meta, to their begrudging credit, is pretty close to this. They’re making things worse and worse for safety work, but they’re not doing it out of some “well we had to or bla bla” victim mindset.

Everyone signs the letter, nobody builds the AI, then we find out in ten years that the CIA was building it this entire time with NSA assistance, it gets loose, and everyone gets predator droned before the paperclip maximizer machines ever get going.

You know what happened in scenario 5? We got ten years for alignment. Still pretty impossible, but hey, maybe the horse will sing.

Now, there are plausible takes for going as fast as possible - I am partial to “we should make unaligned AI now because it’ll never be this weak again, and maybe with a defeatable ASI we can get a proper Butlerian Jihad going” - but this ain’t it chief.

Wonder who at NIST is actually going to leave over this (if any).

As a doomer, honestly I can never parse if this sort of thing is “AI ESG trying to defect against us even though we’ve been trying to play nice with them very hard” or “AI accelerationists trying to drive a wedge into AI safety.”

In other words, “big, if true”.

Okay I take it back, this is … wait, she’s not even defecting, she’s just shitting on him randomly for lolz. Destroy Twitter when?

Well let’s all hope he’s right about that.