Using a tool like this to hide sections of code presented for review places a lot of trust in the automation. If Mallory were to discover a blind spot in the semantic diff logic, she could slip in a small change for eventual use in an exploit, and it would never be seen by another human.

For example, consider this part of the exploit used in the recent xz backdoor. In case you don’t see the problem, here’s the fix.

Rather than hiding code from review, if a tool figured out a way to use semantic understanding to highlight code that might be overlooked by a human (and should therefore be reviewed more carefully), it could conceivably help find such things.

I don’t have an opinion on the topic but I see a blind spot in your argument, so I have to be that kind of person … 🥺

One could use the exact same example to argue that humans are very bad at parsing code (especially if whitespace kicks in). In that regard a tool that allows them to reason on a standardized representation of the AST can be a protection against a whole class of attacks.

That’s not a blind spot in my comment. See my final paragraph.

It’s only one sentence. Maybe it was easy to miss. :)

I like the idea, but I can’t come up with any method that won’t devolve into most reviewers only checking the highlighted parts tbh.

Oh yeah, so I’m that other kind of guy 🥺

I kinda like your idea, but I think it can be difficult to detect some confusing situations. I think it would be a better idea, but I don’t think it’s a full replacement.

> If Mallory were to discover a blind spot in the semantic diff logic

This is a very big stretch IMO. That xz change wasn’t actually the exploit, it was just used to make the exploit less detectable. And it was added by people with commit access so it didn’t even have to go through code review.

On top of that, code review is not magic. It’s easy to get bugs past it hiding in plain sight (if that wasn’t the case Linux would be bug free!).

Can you think of an actually realistic example?

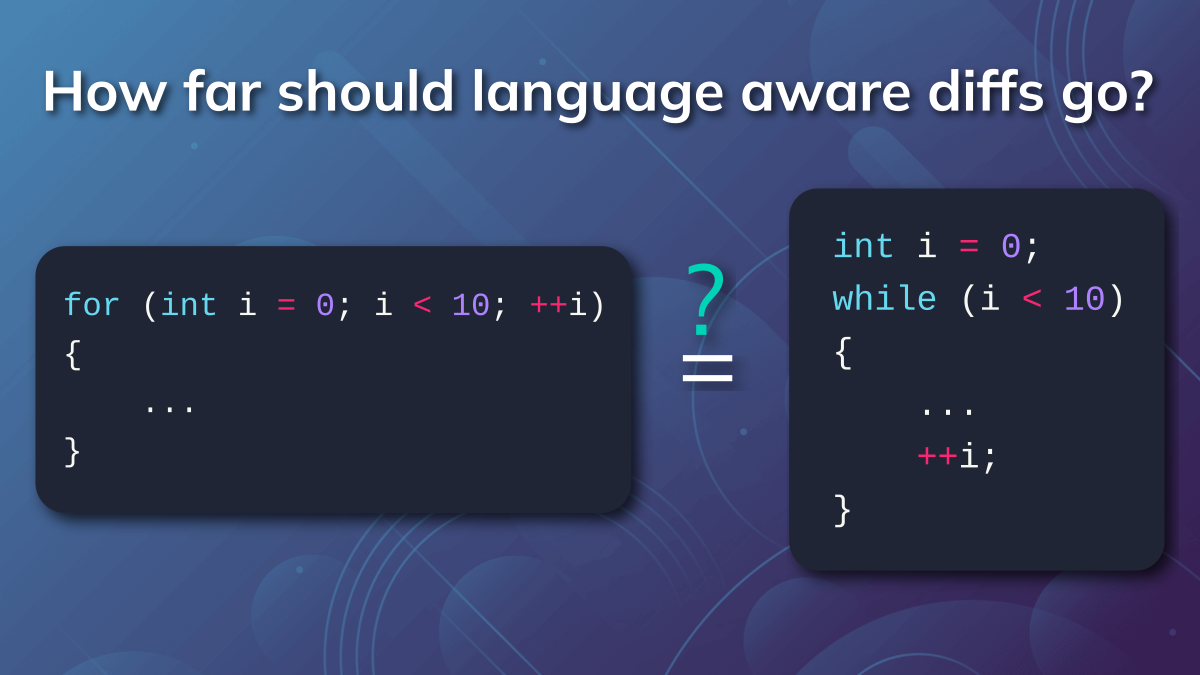

Interesting question. I’d be comfortable up to level 2 in this list, after which I want to have my eyes on the changes. Even where code is functionally or semantically equivalent, style can make a lot of difference for comprehension and maintainability.

I’d agree, for the same reasons. Communicating intent is definitely one of the main things that separates mediocre from amazing developers (and software can’t check that).

It’s interesting to consider a tool that does all of levels 1-3 (and more) as a way to verify that a style refactoring hasn’t changed logic. I assume that’s what they meant when they wrote “modifications that were supposed to be no-ops but aren’t”.

I was into this until I realized that it’s not open source and not even available outside of vscode and GitHub web

I’ll opt for “Level 0”.

Unless you’re just doing a diff for personal code or something, you should be reviewing everything a developer has done. Yes, whitespace changes too.

4 is sheer madness. 1 is common sense. 2 is just the cooler version of 1.

I’ve always found hardcoded style to be an obnoxious and counterproductive paradigm. It’s the text editor’s job to handle line wrapping, and there’s no reason a coding editor shouldn’t be able to format code intelligently. I hate hard line breaks that do not have meaning. Not everybody is using the same size windows! It’s 2024! We have the technology!

The example for 2 isn’t good. Seemingly superfluous commas, brackets, and escaped newlines can be useful and even important for clean maintenance.

The solution to the whitespace gripe is strictly enforced formatting standards with a git hook running a manually invokable script.

Yeah but sometimes you do get meaningless changes that aren’t just whitespace even with auto formatters. For example if you change the indentation on some code and that causes it to wrap an expression.

How is that not whitespace?

`git diff -w` only ignores whitespace within a line (e.g. changing indentation). It doesn’t ignore adding or removing new lines.

But even if it did, wrapping a function call or a long string can introduce extra commas or quotes.
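To make that concrete, here is a minimal Python sketch (Python only because its standard `ast` module makes this easy; the snippets and helper names are made up for illustration). A formatter wraps a call and adds a trailing comma: dropping every whitespace character still leaves a textual difference, while the parsed syntax trees come out identical.

```python
import ast

# Hypothetical before/after snippets: a formatter wrapped the call and
# added a trailing comma.
BEFORE = "result = compute(alpha, beta)\n"
AFTER = "result = compute(\n    alpha,\n    beta,\n)\n"


def strip_whitespace(source: str) -> str:
    """Crude whitespace-insensitive view: drop every whitespace character."""
    return "".join(source.split())


def same_ast(a: str, b: str) -> bool:
    """True if both sources parse to the same tree (positions are ignored)."""
    return ast.dump(ast.parse(a)) == ast.dump(ast.parse(b))


# The trailing comma survives whitespace stripping, so the texts differ.
text_equal = strip_whitespace(BEFORE) == strip_whitespace(AFTER)
# The parser discards layout and the trailing comma, so the trees match.
tree_equal = same_ast(BEFORE, AFTER)
print(text_equal, tree_equal)
```

This is only a sketch of the idea, not how any particular semantic diff tool works. A real tool would also have to decide what to do with comments, which `ast` drops entirely.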

> The solution to the whitespace gripe is strictly enforced formatting standards with a git hook running a manually invokable script.

Throwing a linter into the pipeline just hardcodes the formatting at that point in the pipeline. That doesn’t really solve the issue, which is that style is not a one-size-fits-all concept, and displaying text appropriately is really the job of a text editor. To quote PEP 8, “default wrapping in most tools disrupts the visual structure of the code”. In other words, “most tools” suck at displaying code, because they are not language-aware. That’s the real problem. Hardcoding style is a workaround, not a solution.

That said, I wouldn’t consider intelligent editors to be a replacement for formatting standards, either. Ideally my text editor would display my Python code the way I like it, and then save to disk in accordance with PEP 8.

Why would there be one answer to this? I’d probably use all the available levels depending on the situation, in the same way I’d use `--word-diff` or `-b` in `git` when I need help understanding a complex change.

It really depends. Whitespace is something most languages don’t care about; the only people who care are the ones enforcing style guides. Level 2 is similar, but there it starts to get more critical: can you be sure it makes no difference? Level 3 is critical. While it can help rule out code that probably didn’t cause the problem, it does make a difference in code review. If a specific hex number is well known, for example 0x4711, and someone changes it to 18193 or even to binary, information gets hidden from the programmer. It makes a difference for style too. For a flag enum, the thing to use is binary or bit shifts, because both are readable. Decimal is readable only up to a point: 4 bytes is fine, but at the 5th I don’t know them by heart and can’t even spot them. Level 4 is irrelevant: it’s at the top of the file, so bothering to hide it isn’t necessary. It can also be relevant. For example, a while ago at our company we had code that needed to work with .NET 2 alongside parts on .NET 4, and at some point new files had the `using` for LINQ, which isn’t available in .NET 2. This happened a lot.
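The hex example is easy to reproduce. A small Python sketch (the constant name `MAGIC` and the helper names are made up; Python is used only because `ast` and `tokenize` ship with it): the two spellings parse to the same tree, so a tool comparing trees would see no change, while the token stream still carries the spelling the author chose.

```python
import ast
import io
import tokenize

# Hypothetical constant, written once in hex and once in decimal.
HEX_FORM = "MAGIC = 0x4711\n"
DEC_FORM = "MAGIC = 18193\n"


def same_ast(a: str, b: str) -> bool:
    """True if both sources parse to the same tree (positions are ignored)."""
    return ast.dump(ast.parse(a)) == ast.dump(ast.parse(b))


def number_literals(source: str) -> list[str]:
    """Return numeric literals exactly as they are spelled in the source."""
    tokens = tokenize.generate_tokens(io.StringIO(source).readline)
    return [tok.string for tok in tokens if tok.type == tokenize.NUMBER]


# Both literals evaluate to the same integer, so the trees are equal...
trees_match = same_ast(HEX_FORM, DEC_FORM)
# ...but the spelling a reviewer would recognize differs.
spellings = (number_literals(HEX_FORM), number_literals(DEC_FORM))
print(trees_match, spellings)
```

So whether a change like this is "equivalent" depends on which layer you compare, which is roughly the argument for keeping such changes visible in review.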

The best solution is to have options and let the person using it decide. What I’m missing is a way to add my own ignore list. For example, our XML files have a date in them. The XML class is badly written: instead of one date attribute on the first node, we have one on every node. This is pretty irrelevant to show in a diff, because it’s not even used. Rewriting the class is a big task, because it’s a core feature and everything can break if one thing is missed.
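A user-defined ignore list like that could be approximated by normalizing both versions before diffing. A rough Python sketch, assuming the attribute is literally called `date` (the attribute name, the sample documents, and the helper are all hypothetical):

```python
import xml.etree.ElementTree as ET

# Hypothetical ignore list: attribute names to drop before comparing.
IGNORED_ATTRIBUTES = {"date"}


def normalized(xml_text: str) -> str:
    """Serialize the document again with the ignored attributes removed."""
    root = ET.fromstring(xml_text)
    for element in root.iter():  # iter() visits the root and all descendants
        for name in IGNORED_ATTRIBUTES:
            element.attrib.pop(name, None)
    return ET.tostring(root, encoding="unicode")


# Made-up sample documents.
OLD = '<config date="2024-01-01"><item date="2024-01-01" value="1"/></config>'
NEW = '<config date="2024-02-01"><item date="2024-02-01" value="1"/></config>'
CHANGED = '<config date="2024-02-01"><item date="2024-02-01" value="2"/></config>'

# Only the dates differ, so the normalized forms are identical.
only_dates_changed = normalized(OLD) == normalized(NEW)
# A real change to another attribute still shows up.
real_change_visible = normalized(OLD) != normalized(CHANGED)
print(only_dates_changed, real_change_visible)
```

The normalized text could then be fed to whatever diff tool you already use; Git's `textconv` diff driver setting is one place a script like this could plausibly be hooked in.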

I strongly and broadly disagree, both with the premise and with their argumentation.

But first off, because it’s significant to the argumentation: why is their entire argumentation in line-based diffs, when the screenshots on their homepage show inline diffs?

Inline marking of differences is incredibly powerful. Programming-language-aware diffing should use that understanding to support the highlighting, with the option to ignore different levels of irrelevance at the user’s choice.

I don’t want anything hidden. I want to default to every difference shown, but put side by side as much as possible, with different levels of highlighting of the differences according to their relevance and significance.

I want to default to every difference shown, but have alternative options to ignore things. I want to have the option to ignore whitespace changes, and potentially additional options to ignore more (this is the opportunity semantic understanding could bring, in addition to the line and change matching).

TortoiseGitMerge allows me to choose between

- Compare whitespace

- Ignore whitespace changes

- Ignore all whitespace changes

I default to the first, but regularly switch to the second and third when indents change. Indent changes are irrelevant when assessing the logical changes, but whitespace is still significant in laying out code. Both views matter, for different reasons and at different layers of assessment.

All that being said, we don’t use an enforced automatic formatter.

But also, we use whitespace, line breaks, and other syntax consciously. Readability takes precedence over consistency. I hate hard character limits on lines. Sometimes the vertical structure is much more significant to readability than an arbitrary (and often narrow) line character limit.

One example:

Do you write

    if (error != null) return error;

or do you write

    if (error != null)
        return error;

or do you write

    if (error != null) {
        return error;
    }

I dislike the second because its semantic structure is not very obvious and it is somewhat error prone. For simple early returns like this, I prefer the first. But in cases where it’s not a simple and short return, the third can be preferable despite being only one statement.

Automated formatters often can’t choose between these forms case by case, and some don’t allow disabling a single rule.

I’m OK with level 3 for small teams. The reasoning is that, if someone changed it to a semantically equivalent block, they had a reason to do so and putting effort reviewing semantically equivalent things is a bit of a waste.

Assuming it’s C/C++, the for and the while loops are not equivalent: the scope of the variable i is not the same.

I am very content with Rider’s “hide whitespace and newlines” diff option. Frankly, after we started using auto format on code, all the old files got messy in the diffs the next time they were changed.

There are some other nitpicks that a more language-aware diff could handle, but outside Python few whitespace changes matter, so seeing every new line is a waste and a visual burden in any review for me.