I’ve recently seen a trend in tech communities on Lemmy where people have developed this mentality that computer hardware is as disposable as a compostable cup, and that after 10-15 years you should just chuck it in the bin and get something new. If someone asks for tech support, they’ll just be told to buy new hardware. If someone is sad that their hardware is no longer supported by software, they’re told they’re just entitled, need to pull themselves up by their bootstraps, and “only” spend $100 on something used that also won’t be supported in 5 years. It doesn’t matter if there is actual information out there that would help them, either. If the hardware is old, people will unanimously decide that nothing can be done.

I’ve seen this even in Linux communities. What happened to people giving a damn about e-waste? Why is the solution always to just throw money at the problem? It’s infuriating. I’ve half a mind to just block every tech/software community other than the ones on Hexbear at this point.

It’s crazy that we treat the most unbelievably advanced machines most people will ever have direct access to as completely disposable.

Well, that’s certainly part of why they’re disposable: they’re much harder to repair and reuse.

I’ve never considered that, but you’re very right.

Outside of gaming, the requirement for new hardware literally only seems to exist to cover the endless abstraction slop that businesses use to push out more features faster. Meanwhile, the product I’m using hasn’t changed at all in the last 10 years and continues to have the same bugs, yet now requires an NVMe SSD and a 500-core, 800-watt CPU to deliver the same functionality it did 10 years ago.

I can’t think of a single meaningful way that literally ANY of the software I use has changed since the 90s, other than getting bigger and slower.

Actually, games aren’t even immune from this. Unreal Engine is prime abstraction slop, because shitting out crap matters more than making anything good. God forbid anyone get the time to make their own engine; no, just ram a barely working open-world game into an engine that can barely render more than 3 people on screen without shitting the bed.

Yes, Mr. Nvidia sir, I will buy your 1000-watt electric heater to run this mid-ass game that hasn’t innovated on shit all since the PS2 era. Ray tracing, you say? Wow, this really enhances my experience of point-to-point, repetitive, collect-20-boar-arses MMO-ass gameplay-ass shit, with AI that’s still as rudimentary as the guards in Hitman 2: Silent Assassin.

Browsers have gone from document renderers to virtual machine app platforms over the last 30 years. It’s a significant change, even if mostly not good.

And yet my use of the internet hasn’t changed: GameFAQs, blogs, forums and YouTube. All stuff that rendered just fine before I needed to be shipped 900 GB of React bollocks. My desire for web apps is in the negative; they almost always feel worse than native applications, but those are a dead art.

I can’t point to anything browsers have done that has improved my life, and nothing they’ve done couldn’t already be done better elsewhere.

Full agreement from me.

> I can’t think of a single meaningful way that literally ANY of the software I use has changed since the 90s, other than getting bigger and slower.

Lotus Notes got basically killed, so that’s good.

New Outlook is faster and more reliable at the cost of crippled or removed basic functionality.

We have good videoconferencing now, which we definitely couldn’t do before. Miss me with that Teams bullshit, but hit me with that group family FaceTime.

Miss me with the endless proliferation of locked-down messaging, audio and visual software, though.

With proprietary software in general, really.

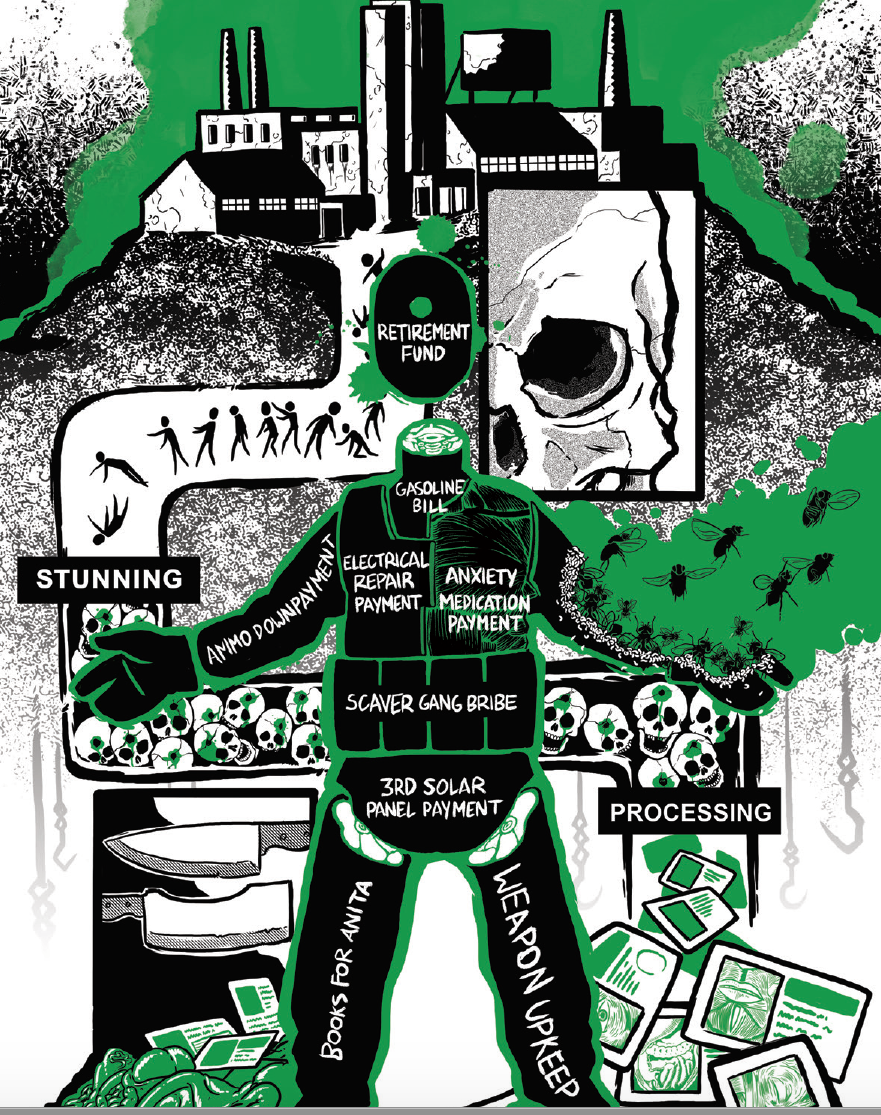

$250-ish is not far from the median monthly wage in my country. I always cringe when they say this.

It really is the intersection with capitalism that makes this so difficult. Many computer users in the Global South have much older hardware than what’s available in the imperial core, but most software maintenance happens in the imperial core, so there’s a purposeful disconnect between manufacturers and users.

Libre operating systems have been a lot better at this, but we feel the shortage of labor and expertise a lot. And when hardware companies make boneheaded moves like Nvidia restricting their newer drivers to newer cards, there’s really nothing we can do on our side besides keep packaging legacy drivers.

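It doesn’t help that newer computer products have become more hostile to user repairs and have built-in lifespans thanks to soldered SSDs: flash memory wears out after a finite number of writes, and when it’s soldered to the board, the whole board goes with it.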

32-bit computers are going to have to go away at some point, though, due to the 2038 problem (unless the intention is to never connect those devices to the Internet again). So at the very least it’s a legitimate hardware limitation.

time_t on 32-bit Linux can already be 64 bits, I think; the kernel (since 5.6) and glibc (since 2.34) support it. The real fear is Linux dropping 32-bit support, which would turn 32-bit PCs into pure retrocomputing devices. The last new 32-bit Intel processors were Pentium 4s from 2008 and, I think, some Atoms up to 2010.
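For anyone wondering what the 2038 problem actually is: a signed 32-bit count of seconds since the Unix epoch (1970-01-01 UTC) can’t go past 03:14:07 UTC on 19 January 2038, and one second later it wraps to a date in 1901. A minimal Python sketch; `ctypes.c_int32` is only used here to mimic how a 32-bit `time_t` wraps on typical hardware (in C, signed overflow is actually undefined behaviour, so treat the wrap as illustrative):

```python
import ctypes
from datetime import datetime, timedelta, timezone

# Unix epoch: time_t counts seconds from this instant.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def as_datetime(seconds: int) -> datetime:
    """Convert a seconds-since-epoch count to a UTC datetime.

    Uses timedelta arithmetic instead of datetime.fromtimestamp so that
    negative values work on every platform.
    """
    return EPOCH + timedelta(seconds=seconds)

# Largest value a signed 32-bit counter can hold.
last_ok = 2**31 - 1

# One second later, truncated to 32 bits the way a 32-bit time_t would
# wrap on two's-complement hardware (ctypes does no overflow check).
wrapped = ctypes.c_int32(last_ok + 1).value

print(as_datetime(last_ok))  # 2038-01-19 03:14:07+00:00
print(wrapped)               # -2147483648
print(as_datetime(wrapped))  # 1901-12-13 20:45:52+00:00
```

With a 64-bit `time_t` the same counter lasts roughly 292 billion years, which is why the fix is simply a wider type (plus the ABI pain of changing it).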

It’s very deliberate and forced; the software-side justifications for requiring mid-to-high-tier hardware produced in the last 5-6 years are incredibly weak.

Relatedly, hardware from that period also increasingly suffers from planned obsolescence and expensive upselling.

Videogames and their communities are by far the worst offenders. Had a friend see me playing a game on my laptop at 720p resolution the other day and they pretended to be offended by it. “that’s unplayable!” Like, says who? Tech influencers paid to set your expectations too high?

I’ve been this friend tbh, and for good reason. A friend of mine uses an old M1 MacBook to play everything, and she sometimes complains about input lag. I tried her setup and, no joke, it had about 50-80 ms of extra input delay when running rhythm games through Wine on ARM. It was actually comical and a bit silly.
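To put 50-80 ms in perspective: a 60 Hz display draws a new frame roughly every 16.7 ms, so that extra delay spans several whole frames. A quick Python sketch of the conversion (the 60 Hz refresh rate is an assumption; the actual panel may differ):

```python
def latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """How many display frames a given input delay spans at a refresh rate."""
    frame_time_ms = 1000.0 / refresh_hz  # ~16.7 ms per frame at 60 Hz
    return latency_ms / frame_time_ms

for extra_ms in (50, 80):
    print(f"{extra_ms} ms at 60 Hz ~ {latency_in_frames(extra_ms, 60):.1f} frames")
```

That works out to about 3 to 5 frames late on every note, which is a lot in a genre built around hitting timing windows.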

Oh, that’d drive me nuts. I’ve got like one game that won’t run well on my Linux system, and it’s like… not even WINE’s fault. That game is notoriously laggy on Windows, too. It’s not a hardware limitation; better hardware would do literally nothing to speed it up. I know because it runs exactly as shit on my nice, few-years-old system as it did on my older-than-me shitbox when I was 10. I’ve tried running it with all the graphics options at their lowest, and it helps either not at all or by an imperceptibly small amount. So all better hardware has gotten me there is that now the cats and dogs in the game look nice and fluffy and huggable, and the water in the world map is reflective.

Ngl, if you have computer hardware that’s 10-15 years old, be prepared to replace it anyway; that’s about the longest I’ve kept a GPU alive. Though I do otherwise agree. I have an AMD RX 480 that is a perfectly serviceable card, except that AMD dropped support for it several years ago. Moreover, it’s been 10 years and AMD’s drivers are still ass. Every year I go ‘oh, this will be the year of the AMD GPU’ and every year I am shockingly wrong. My Nvidia RTX 3060 is starting to show its age a bit. Not too much, but enough that I am considering an upgrade. I wouldn’t care so much if I could use the 480 for compute, but nope.

The 30-series cards still support 32-bit PhysX, so if you get a newer card you can keep your old one in as a dedicated PhysX card to run the Mirror’s Edge glass etc. The Nvidia drivers even support splitting certain loads off onto certain cards…

Good call out, I hadn’t considered that

This is way worse with […]. A few years is ancient to a lot of them to play some unoptimized slop made with Unreal Engine on the lowest settings.

> A few years is ancient to a lot of them to play some unoptimized slop made with Unreal Engine on the lowest settings.

UE5 is the bane of gaming to me. There’s not a single UE5 game that runs well. The entire engine is nothing but diminishing returns. “Here, we made lighting look slightly better, but you need 16 GB of VRAM and upscaling to make it work, even with this setting off anyway.”

I see people on Reddit talking about GPUs like anything that isn’t the top top top of the line is “mid tier” and shouldn’t be expected to get good frame rates at high settings.

> anything that isn’t top of the line is “mid tier”

A lot of that is that Nvidia, in addition to jacking the prices to the moon, changed the way their SKUs are lined up. Instead of the old way, where the top-of-the-line GPU such as the Titan or xx90 cost a lot more money for a small performance increase, the top card is now miles ahead of the one below it. The difference between the 5090 and the 5080 is about the same as between the 1080 Ti and the 1070.
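Core counts are only a crude proxy for performance (clocks, memory bandwidth and architecture all differ between generations), but they show the same shape of gap. A quick Python comparison using Nvidia’s published CUDA core counts:

```python
# Published CUDA core counts; a crude proxy for relative performance only.
CUDA_CORES = {
    "RTX 5090": 21760,
    "RTX 5080": 10752,
    "GTX 1080 Ti": 3584,
    "GTX 1070": 1920,
}

def core_ratio(upper: str, lower: str) -> float:
    """Ratio of core counts between two cards."""
    return CUDA_CORES[upper] / CUDA_CORES[lower]

print(f"5090 vs 5080:    {core_ratio('RTX 5090', 'RTX 5080'):.2f}x")
print(f"1080 Ti vs 1070: {core_ratio('GTX 1080 Ti', 'GTX 1070'):.2f}x")
```

Both pairs come out at roughly 2x, i.e. the current xx80 sits about as far below the flagship as a Pascal xx70 did.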

Nvidia cards are insanely overpriced, very true. I think my next card will be Intel. I have AMD currently, and many games I’ve wanted to play just don’t work well with AMD GPUs for some reason, for example the 2014 Wolfenstein or the first Evil Within.

I’d expect even more driver problems with Intel, to be honest, given how late they are to the game, especially with older games like that. They’re definitely priced more competitively, though. I’m just begging for the AI bubble to pop already.

As far as I’ve heard, Intel had terrible driver support up until recently, and now the drivers are good. I was actually gonna shell out more for a less powerful Nvidia card myself until I heard Intel’s drivers had gotten more usable. Maybe you’re right tho and they’re still bad. It’s also tempting to just buy an old 1080 Ti for cheap. I only play at 1080p, so it should be good for a while, but with driver support ending soon it’s probably not worth it.

As I understand it, they’ve definitely improved substantially and very quickly; I just don’t know if they’re at AMD’s level yet, especially with older games that came out before Intel had dGPUs. I didn’t have too many issues back when I had an RX 480 myself, though, so maybe they’ve gotten worse.

I hate UE5, but so far STALKER 2 is the only game I haven’t been able to get running in an acceptable manner. Stutters and all that shit persist in UE5 no matter what I do, but with STALKER, even if I accept a (barely) consistent 30 fps, it’s still a blurry, ugly mess. Granted, I know they’ve patched it a couple of times since launch, but as far as I’m concerned the game isn’t even coming out for another year. Maybe by then they’ll get the fuzz off the trees.

That’s not one I’ve tried personally, but yeah, that’s most of what I hear about it. More than “finally a new STALKER game! It’s awesome!”, it’s just people complaining that it runs like shit because the engine sucks. Apparently if you can get beyond that there’s fun to be had, tho.

I put about 20 hours into it and then there were like 4 game breaking bugs that there was no workaround for. Not reinstalling until the game is, you know. Finished.

To play devil’s advocate, it can be really hard to maintain an ever-increasing list of supported hardware, especially when new hardware has new features that then require special care to handle across devices and further segment feature sets.

Supporting every device that came before also requires devs to have said hardware to test with, lest they release a buggy build and get more complaints. If devs are already strapped for time on the new and currently supported devices, spending any more time to ensure compatibility with a 10-15 year old piece of hardware that has a couple dozen active users is probably off the table.

See 32-bit support for Linux. I get why they’re dropping support, but I also don’t like it. In Linux, there’s basically a parallel set of libraries for i386. These libraries need maintenance and testing to ensure they’re not creating vulnerabilities. As the kernel grows, it essentially doubles any work to ensure things build and behave correctly for old CPUs, CPUs that may lack a lot of hardware features that have come to be expected today.

People also like to say “oh but xyz runs just as good/bad on my 15-20 year old computer, why do we need NEW???”. Power, it’s power use. The amount of compute you get per watt from modern chipsets is incomparable. Using old computers for menial tasks is essentially leaving a toaster oven running to heat your room.

Yeah, this really seems like a problem inherent to capitalist production. New hardware doesn’t need to release every year. We could cut back on a lot of waste if R&D took longer and was geared toward longevity and repairing rather than replacing. Unfortunately, all the systems of production were set up when Moore’s Law was still in full swing, so instead we’re left with an overcapacity of production while at the same time approaching the floor of component miniaturization as Newton gives way to Schrödinger.

I agree with you completely.

I do want to add that I’d wager this overall scenario would be present regardless of how long the release schedules are, especially since people want to keep using their 10-15 year old hardware.

It’s somewhat frustrating that many useful features are also in opposition to repairability. Most performance improvements we’ve seen in the last 10 years basically require components to get closer and closer together. Soldered RAM isn’t just the musings of a madman at Apple; it’s often the only practical way to control noise on the wires and reach the speeds the hardware is capable of. Interchangeable parts are nice, but every modular component adds a failure point.

Add in things like, say, water resistance. It makes the device way more durable in daily life at the expense of difficult repairs. Or security: it’s great that grandma won’t get her phone hacked, but now we can’t run our own code.

There’s also a bit of an internet-commie brainworm that I’m still trying to pin down. You said, “We could cut back on a lot of waste if R&D took longer and was geared toward longevity and repairing rather than replacing”, but what does this actually look like? I think it’s at odds with how most of us use technology. Do we want somewhat delicate, fully modular, bulky devices? What does it mean to be repairable if the entire mainboard is a single unit? If you need to make each component of the mainboard modular, the device will quadruple in size, making it overall worse to use than the small disposable device (and more expensive, too). The interconnects will wear out, making modules behave in unexpected ways. The effort required to support a dozen interconnected modules for years and years would be substantial. Not only that, the expertise required to repair things below the level of a plug-and-play module is far higher.

I had a small wireless dongle die on me after about a year of use. It basically stopped connecting to my phone. I noticed that it would connect for a short period before disconnecting, and when the device hadn’t been used in a while, this period was longer. From my own repair experience, I knew this pointed to a cracked solder joint opening up as the board heated and expanded. I brought it into work and held a reflow gun to it for 5 or so minutes, heating up the PCB enough to reflow whatever bad joint was causing the issue. I looked this up after the fact and found that someone else had found the same solution; they basically told people to put the whole device in the oven for a while and hope it fixed it. People commented in disbelief that this seemingly magical ritual could revive such a black hole of a device. They couldn’t comprehend how someone could come across this solution. Of course it wasn’t magic; it was fairly simple if you’ve encountered thermal expansion, cracked solder joints, and misbehaving electronics in the past. The point of this story is that, had this not been the solution, the device would have been e-waste, even more so than it already is, because even the simplest repair was magic to people unconcerned with technology. I’ve dedicated my life to technology and engineering, and even then I’m basically an idiot when it comes to a lot of things. Most people are idiots at most things and good at a couple of things.

I understand people are upset when their technology stops working. It stops working because the people who are experts in it don’t have the time or funding to keep it working, and the people who want it to work don’t understand how it works in the first place; if they did and really wanted their old hardware working, they’d develop a driver for it. People do this all the time, and it takes months if not years of effort from a handful of contributors to even begin to match what a well-funded team of experts can get done when it’s their day job.

There’s a fundamental disconnect between those who use and purchase technology and those who make it. The ones who make it are experts in tech and idiots at most things, and the ones who use it are likely experts in many things but idiots with tech, or simply have a day job they need to survive and don’t have the time to reverse engineer these systems.

Even in an ideal communist state, resources need allocation, and the allocation would likely still trend towards supporting the latest version of things that currently have the tooling and resources, whose power consumption is lower and speed higher. We might get longer lifespans out of things if we don’t require constant improvement to serve more ads, collect more data, and serve HD videos of people falling off things, but the new technology will always come around, making the old obsolete.

> There’s also a bit of an internet-commie brainworm that I’m still trying to pin down.

Your post is great & I love it in its entirety, but I think this part kinda boils down to people thinking everything is capitalism’s fault, when sometimes things are just exacerbated by capitalism. As you acknowledge later, there are real challenges in developing and implementing technology. That’s not to say it couldn’t be done in a more responsible way, but I think many people not in technical fields get jaded and stop believing this.

It’s just the nature of technology that the more advanced it is, the harder it is to actually repair.

Repairing pre-19th-century tech (e.g. a shovel, a blanket, or a wooden chair) is trivial because devices made with pre-19th-century tech don’t have some crazy demand for precision. A shovel will still be useful as a shovel even if the handle is an inch wider than the actual specs. It doesn’t matter that the replacement wooden leg isn’t exactly the same as the other three legs.

Repairing some 19th century tech like mechanical alarm clocks isn’t hard either and is more than doable for hobbyists if they have access to machinist equipment like lathe machines and drill presses. You could use lathe machines to make your own screws, for example. CNC machines open up a lot of possibilities.

Repairing 1950s-1980s commercial electronics like ham radios becomes harder in the sense that you can’t just make your own electrical components but have to buy them from a store. Repair is reduced to merely swapping parts instead of making your own parts to replace defective ones. But as far as swapping out defective components goes, it’s not particularly hard; you basically just need a soldering iron. As for how precise the components have to be, plenty of resistors had 20% tolerance. A commercial ham radio isn’t built with parts that have <0.1% tolerance.
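To see why loose tolerances make swapping parts forgiving, take a hypothetical voltage divider (two 10 kΩ resistors across 12 V; the values are made up for illustration, not taken from any particular radio) and compute the worst-case output at 20% versus 0.1% tolerance:

```python
def divider_out(v_in: float, r_top: float, r_bottom: float) -> float:
    """Output of a simple two-resistor voltage divider."""
    return v_in * r_bottom / (r_top + r_bottom)

def worst_case(v_in: float, r_nominal: float, tolerance: float) -> tuple[float, float]:
    """Min/max divider output when both resistors can drift by +/- tolerance.

    The output is lowest when the top resistor is high and the bottom one low,
    and highest in the opposite case.
    """
    lo_r, hi_r = r_nominal * (1 - tolerance), r_nominal * (1 + tolerance)
    return divider_out(v_in, hi_r, lo_r), divider_out(v_in, lo_r, hi_r)

for tol in (0.20, 0.001):
    low, high = worst_case(12.0, 10_000.0, tol)
    print(f"+/-{tol:.1%}: {low:.3f} V to {high:.3f} V (nominal 6 V)")
```

A circuit designed around 20% parts already has to work anywhere from about 4.8 V to 7.2 V here, so almost any replacement resistor of the right nominal value will do.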

By the time you get to modern PCs, you mostly don’t have the ability to truly repair them. You can swap out parts, but it’s not like 1980s electronics where “parts” means an individual capacitor or an individual transistor. Now “parts” means the PSU or the motherboard or the CPU. People with defective radios can troubleshoot and pinpoint the components that failed, while people with a defective motherboard at best sniff it to see if parts of it smell burnt and look for bulging capacitors.

The only parts of a modern PC that you can still repair are the PSU, the chassis, and various fans. Everything else is just “it stopped working, so I’m going to order new parts on Amazon and throw the old part away.” It’s a far cry from a wooden chair where everything from the seat to the legs to the upholstery to the nails can be replaced.

I think people who are into computers don’t really understand the extent to which computers aren’t really repairable relative to purely mechanical devices. The “do not panic, we can always make our own parts” attitude that is present among hobbyist machinists is completely absent among computer enthusiasts.

It takes a real long time for the inefficiency of an old computer to add up to the embodied energy cost of a new computer, though.
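A rough way to check that: divide the energy it took to manufacture the new machine by the energy it saves each year. Every number below is a hypothetical placeholder (the 1000 kWh embodied-energy figure in particular is assumed for illustration, not a measured value for any real product); plug in your own.

```python
def break_even_years(embodied_kwh: float, old_watts: float,
                     new_watts: float, hours_per_day: float) -> float:
    """Years until a new machine's lower power draw repays its embodied energy."""
    saved_kwh_per_year = (old_watts - new_watts) * hours_per_day * 365 / 1000
    if saved_kwh_per_year <= 0:
        return float("inf")  # the new machine never pays back
    return embodied_kwh / saved_kwh_per_year

# Hypothetical: old box averages 60 W, new one 20 W, used 8 hours a day,
# and building the new one cost 1000 kWh.
years = break_even_years(embodied_kwh=1000, old_watts=60, new_watts=20, hours_per_day=8)
print(f"{years:.1f} years to break even")
```

Under those made-up numbers it takes about 8.6 years; run the old machine only 2 hours a day and the payback period gets four times longer.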

also true

For the last 24 hours, I’ve heard so many people say Linux is dropping 32-bit support.

Some (most) distros have dropped support for 32-bit, and Firefox stopped providing a 32-bit version for Linux, but the kernel still very much supports 32-bit. I believe there were recently some talks of cutting out some niche functionality for certain 32-bit processors, but I’ve not heard anything about actually gutting 32-bit support from the kernel.

Idk, I’m probably too invested in this. Internets got me going nuts. I should prolly touch grass.

FWIW, this isn’t happening right now. There is a gradual reorganization in which 32-bit i686 packages are being isolated. Wine’s WoW64 mode, for example, allows running 32-bit Windows programs on a system with only 64-bit libraries installed. Fedora will probably be the one to do this first, but this change is contingent on Steam (which is a 32-bit application on GNU/Linux and Windows).

There will always be specialized distributions that cater to 32-bit systems, though. Also, Debian is probably never going to drop i686 until it physically stops compiling.

Well, that’s Debian for you. The whole point of it is that it’ll run in situ forever.

I think there was some news recently about maintainers wanting to call it quits on 32-bit support. That might be why a lot of folks are talking about it.

Yep, it’s never just “upgrade your GPU”. That’s one bit. But for the new GPU to work, I’ve gotta get a new mobo, and the new mobo needs new RAM and a new CPU. Basically replacing everything but the damn case, and sometimes that’s gotta go too.

It’s honestly a miracle any of this shit worked in the first place.

I recently got a deal on RAM, which I later realized required a new motherboard, which I later realized had Wi-Fi 7, which required forcibly updating my perfectly fine Windows 10 install to yucky Windows 11. Awful all around. Linux was fine, but goddamn, I bought the RAM to improve some games.

Windows sucks. It’s always sucked. But it definitely used to suck way less.

And, like, Linux is annoying in some ways too. I just personally hate it less.

I feel like the GPU slot on a mobo (PCIe x16) hasn’t changed since I started building PCs in the early 2000s? I got the sense AGP was already mostly phased out by then.

Obviously you couldn’t take advantage of the full speed of current cards with something that old, but it should work, no?

The physical slot hasn’t, but depending on the age, size, and layout, fitting a big new beefy triple-slot GPU is gonna be tight, to say the least.

I used to game on a very similar GPU (a 9600 GT as well, I believe). It was more than adequate for the Quake 3 Arena and Unreal Tournament clones I played.

I think a 9600 would run Crysis decently

IMO, this is less of a problem with the attitude of people and more of a problem of the logic of the whole computing sector. The idea that old parts will be deprecated in the future isn’t just accepted, it’s desired. The sector’s goal is the fastest possible development, and development does happen. A graphics card from 15 years ago is, for all intents and purposes, a piece of electronic junk, 10 times slower and 5 times less energy efficient. This has to do with the extremely fast development of computer parts, which is the paradigm of the field.

In order to maintain 10-15 year-old parts, you’d have to stop or to slow the development speed of the sector. There are arguments to be made for that, especially from an environmental point of view, but as the sector is, you simply cannot expect there to be support for something working 10 times slower than contemporary counterparts.

Hardware just gets better really quickly; GPUs have been roughly doubling in power every two generations. Why wouldn’t developers take advantage of that to make games that look amazing?
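Taken at face value, “doubling every two generations” compounds quickly. A sketch assuming a new GPU generation roughly every two years (an assumption; release cadence varies between vendors and eras):

```python
def speedup(years: float, years_per_generation: float = 2.0,
            generations_per_doubling: float = 2.0) -> float:
    """Relative performance after `years`, if performance doubles every N generations."""
    generations = years / years_per_generation
    return 2 ** (generations / generations_per_doubling)

print(f"after 15 years: ~{speedup(15):.1f}x")
```

That gives roughly 13x over 15 years, the same ballpark as the “10 times slower” figure given earlier in the thread for a 15-year-old card.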

Honestly though, it’s mostly just a gaming problem. I have, among other computers, a 16 year old laptop that does perfectly fine for everything that isn’t running modern videogames (and even then I can still run older titles). Sure, 4GB RAM means I sometimes have to do things one at a time, but old hardware works surprisingly well. x86_64 came out 22 years ago, so if anything it’s surprising that so much is only dropping 32 bit support now.

Phones, on the other hand, are a much more abysmal landscape than computers. A lot of Androids will only get like 3 years of updates, and even the longer supported phones like iPhones and Google Pixels will get a mere 6-8 years of updates.

I’m impressed your laptop is in such great shape. I had a decently high end Dell in 2010 that by 2018 could barely browse the web, overheated without a fan underneath, and had a battery that wouldn’t hold a percentage of charge.

Tbh it was pretty high end back in its day, and it’s had its battery replaced.

For phones, I think everybody should try to buy phones that get community support. I used to have a Galaxy S2 that would always get the newest version of Android because someone on XDA just made it happen. I had a OnePlus 6T for the longest time and the charging port went out before the community support was over. Shit, I’m sure there are still people developing for the 6T

> I had a OnePlus 6T for the longest time

Literally me. I only switched to a Pixel (w/ GrapheneOS) once DivestOS (imo the best OP6T ROM) shut down.

> 10-15 years

Try 10-15 months

God, is it really that bad these days?

I should start posting screenshots of Unreal Engine games at 640x480 and 70% resolution scale that I’ve tweaked into ultra potato mode, only to be able to play them at 24 fps. 😅 I enjoy them too!

At the end of the day, it takes a lot of effort to keep hardware device drivers up-to-date to work with newer software. Of course hardware should be designed for long term support, and at least have enough documentation to make that possible. But it’s also hard when there aren’t many people in the “community” who even have certain hardware to test out. And it takes work to even support any given hardware device in the first place, especially without documentation. And if you’re talking about graphics cards specifically, they’re really complex compared to most hardware devices, and have changed a lot over the last 10-20 years. But with Linux, there just isn’t the necessary labor to support so much stuff.

I understand at some point, certain hardware just has to be abandoned because next to nobody is using it.

My issue is more that on a cultural level people are just a tad too quick to tell somebody they are SOL and that they should buy new hardware.

> My issue is more that on a cultural level people are just a tad too quick to tell somebody they are SOL and that they should buy new hardware.

In many cases it’s just the truth though. It’s the reality that Silicon Valley created for us. Yes it fucking sucks, but it’s capitalism of course it does.

My first server was set up on decade-old hardware and ran like a champ. Old hardware is great if you don’t get hung up over graphs trying to tell you it’s not.

I’m still using an AMD 390X, which admittedly is almost 10 years old, but it still runs most almost-modern stuff pretty well. But I have started to run into a few games where it just straight up won’t play the game at all because it doesn’t support DX12 or something (looking at you, Marvel Rivals). Stopped getting updated drivers like 5 years back lol.
