Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

Deep thinker asks why?

Thus spoketh the Yud: “The weird part is that DOGE is happening 0.5-2 years before the point where you actually could get an AGI cluster to go in and judge every molecule of government. Out of all the American generations, why is this happening now, that bare bit too early?”

Yud, you sweet naive smol uwu baby

esianboi, how gullible do you have to be to believe that a) T-minus 6 months to AGI kek (do people track these dog shit predictions?) b) the purpose of DOGE is just accountability and definitely not the weaponized manifestation of techno oligarchy ripping apart our society for the copper wiring in the walls?

bahahahaha “judge every molecule.” I can’t believe I ever took this guy even slightly seriously.

I swear these dudes really need to supplement their Ayn Rand with some Terry Pratchett…

“All right,” said Susan. “I’m not stupid. You’re saying humans need… fantasies to make life bearable.”

REALLY? AS IF IT WAS SOME KIND OF PINK PILL? NO. HUMANS NEED FANTASY TO BE HUMAN. TO BE THE PLACE WHERE THE FALLING ANGEL MEETS THE RISING APE.

“Tooth fairies? Hogfathers? Little—”

YES. AS PRACTICE. YOU HAVE TO START OUT LEARNING TO BELIEVE THE LITTLE LIES.

“So we can believe the big ones?”

YES. JUSTICE. MERCY. DUTY. THAT SORT OF THING.

“They’re not the same at all!”

YOU THINK SO? THEN TAKE THE UNIVERSE AND GRIND IT DOWN TO THE FINEST POWDER AND SIEVE IT THROUGH THE FINEST SIEVE AND THEN SHOW ME ONE ATOM OF JUSTICE, ONE MOLECULE OF MERCY. AND YET—Death waved a hand. AND YET YOU ACT AS IF THERE IS SOME IDEAL ORDER IN THE WORLD, AS IF THERE IS SOME…SOME RIGHTNESS IN THE UNIVERSE BY WHICH IT MAY BE JUDGED.

“Yes, but people have got to believe that, or what’s the point—”

MY POINT EXACTLY.

– Hogfather

The worst part is I can’t tell if that’s not meant to be taken literally or if it is.

He retweeted somebody saying this:

The cheat code to reading Yudkowsky- at least if you’re not doing death-of-the-author stuff- is that he believes the AI doom stuff with completely literal sincerity. To borrow Orwell’s formulation, he believes in it the way he believes in China.

That thread is quite something, going from “yud is extraordinarily thorough (much more thorough than i could possibly be) in examining the ground directly below a streetlamp, in his search for his keys”, through ‘he believes it like he believes in China’, to ‘honestly, i should be reading him. we have starkly different spiritual premises- and i smugly presume my spiritual premises are informed by better epistemology’.

that gives me questions about China

Yud be like: “kek you absolute rubes. ofc I simply meant AI would be like a super accountant. I didn’t literally mean it would be able to analyze gov’t waste from studying the flow of matter at the molecular level… heh, I was just kidding… unless 🥺?”

“judge every molecule” and “simulation hypothesis” probably have a bit of a fling going

“The AI is attuned to every molecular vibration and can reconstruct you by extrapolation from a piece of fairy cake” is a necessary premise of the Basilisk that they’ve spent all that time saying they don’t believe in.

Quantum computing will enable the AGI to entangle with all surrounding molecular vibrations! I saw another press release today

ah, the novel QC RSA attack: shaking the algorithm so much it gets annoyed and gives up the plaintext out of desperation

An extreme Boss Baby tweet.

https://mastodon.gamedev.place/@lritter/114001505488538547

master: welcome to my Smart Home

student: wow. how is the light controlled?

master: with this on-off switch

student: i don’t see a motor to close the blinds

master: there is none

student: where is the server located?

master: it is not needed

student: excuse me but what is “Smart” about all of this?

master: everything.

in this moment, the student was enlightened

The New York Times Pitchbot enters our territory:

We wanted to understand the future of AI. So we talked to three Hawk Tuah cryptocurrency investors at a White Castle in Toms River.

Kelsey Piper continues to bluecheck:

What would some good unifying demands be for a hostile takeover of the Democratic party by centrists/moderates?

As opposed to the spineless collaborators who run it now?

We should make acquiring ID documents free and incredibly easy and straightforward and then impose voter ID laws, paper ballots and ballot security improvements along with an expansion of polling places so everyone participates but we lay the ‘was it a fair election’ qs to rest.

Presuming that Republicans ever asked “was it a fair election?!” in good faith, like a true jabroni.

unifying demands

hostile takeover

Pick one, you can’t have both.

What would some good unifying demands be for a hostile takeover of the Democratic party by centrists/moderates?

me, taking this at face value, and understanding the political stances of the democrats, and going by my definition of centrist/moderate that is more correct than whatever the hell Kelsey Piper thinks it means: Oh, this would actually push the democrats left.

Anyway, jesus christ I regret clicking on that name and reading. How the fuck is anyone this stupid. Vox needs to be burned down.

i know that it’s about conservative crackheadery re:allegations of election fraud, but it’s lowkey unhinged that americans don’t have national ID. i also know that republicans blocked it, because they don’t want problems solved, they want to stay mad about them. in poland for example, it’s a requirement to have ID, it’s valid for 10 years and it’s free of charge. passport costs $10 to get and it takes a month, sometimes less, from filing a form to getting one. there’s also a govt service where you can get some things done remotely, including govt supplied digital signature that you can use to sign files and is legally equivalent to regular signature https://en.wikipedia.org/wiki/EPUAP

Yeah, the controversy over federal ID cards is completely baffling to me as well, and I imagine like many things in the US it’s some sort of libertarian bugbear or something? But considering the President has now mandated that one’s federal identity is fixed at birth by the angels, it turned out to be a blessing.

The reason is that any government mandated ID is clearly the Mark of the Beast and will be used to bring upon a thousand years of darkness.

You think that’s fringe nonsense and you’d be right on the nonsense part, but that’s literally what Ronny Reagan said while he was president

god damn

I definitely heard it presented as a libertarian bugbear. The American right tends to treat the federal government like it’s Schrödinger’s State. When it does something they like it’s an inviolable declaration of our values and identity as a nation, the truest guarantor of liberty and blah blah blah. When it does literally anything else it’s a sinister plot to hand over even more control over your life to unelected bureaucrats!

See also with the Elon stuff the (pretend-) concern over gov agencies knowing your SSN. Like, what?

Freedom is when nothing works, got it

from what i understand, lack of single national ID gives (part of?) legal justification for this bullshit https://en.wikipedia.org/wiki/Voter_caging that republicans use for voter suppression. idk details, american would have to weigh in

Wow I can’t believe I’m still learning new ways the United States do voter suppression. Imagine if they put all that creativity in something other than white supremacy!


OK this is just my unresearched opinion as an American but I really don’t know what I’m talking about so keep that in mind and treat it as vibes more than research. It’s messy and I haven’t learned about any of it since high school (and my high school left a lot of important parts out):

A bunch of uninformed rambling

US states aren’t thought of as countries for good reason, but in the country’s legal framework that’s kind of how they work – just with a lot of work to make borders almost a non-issue, shared citizenship, shared economy, etc. This means that historically a lot of stuff that would be associated with a country (ID, driving permit, residency, military) either only happens at the state level, or happens at both the state and the federal level.

In the constitution the federal government is supposed to stick to its lane as well: any powers which aren’t explicitly given to the federal government are reserved for the states (10th amendment). Though in practice the federal government has a lot of powers.

That’s the background and helps explain both the lack of a (compulsory) national ID and how there can be state level election shenanigans:

For national ID this was indeed a conservative bugbear. They were essentially worried about the government building a dossier on them or something. I don’t remember the details; it’s been a long time. Conservatism 15 years ago was an entirely different beast than it is today. It’s kind of hard to even imagine whether the conservatives still have the same fears today, whether the liberals don’t, or how it would actually play out. Congress being deadlocked for so long means it’s hard to get a vibe on how things would shake out if they started actually passing lots of laws again.

Oh yeah did I mention congress is deadlocked? This both means that the US is essentially operating on decades outdated laws, and that the legislature’s infighting has led to a power vacuum that the executive and judicial branches have slurped up (which helps explain the current Elon Musk mess).

Anyway election shenanigans: States were historically supposed to be, well, states as in closely aligned countries and this was all set up in the days before fast and easy long distance travel and communication (did I mention America is really big?). This means that each state runs its own election (which it can do in any legal way it pleases). The outcome of the election is one or more electors, and those electors are who actually send in their choice for president. There have been cases of “faithless electors” who vote for someone besides the party they represent. Oddly this hasn’t really been seen as a big deal (since the parties choose the electors they tend to be pretty loyal).

The point of the previous paragraph is this is a mess. Like a real mess. It’s law that made some sense 200 years ago (and maybe not even, they were kinda #yolo-ing the constitution at the time) but is really dated. This means there’s lots of room for shenanigans. Can a state legally disqualify voters? Maybe? Sometimes? Kinda? They’re not supposed to be like racist or anything, but determining that depends on a lot of details and shifting supreme court rulings.

okay so in absence of federal ID how do you authenticate anything when dealing with govt things? just by SSN? we have something similar, but years of security malpractice by people who were not trained to do this made these numbers public to probable attackers, so authentication with just that has not been considered secure for a couple of years now. instead, with anything important (like taking a loan, opening bank account, buying a car or real estate etc), you have to also provide your ID number which doesn’t have this problem

but wait there's more

on top of that, for a year or so, govt implemented a switch in that service from upthread, you can also access it offline. this switch allows you to deactivate your SSN-like number so that anything authenticated with that when it’s off is considered legally void, and probably won’t work in the first place because it’s supposed to be checked in national db. when you have to authenticate something legitimately, you can switch it on for a day, then switch it off again. this was in response to incidents of identity theft

for some things, but not all things, you can also use digital signature

Usually SSN yes. In recent years airports and secure federal buildings are starting to require “real IDs” / star cards which are state IDs which meet federal identity verification requirements. I couldn’t be bothered with all that since I already have a passport so my driver’s license says “Federal Limits Apply”.

For things like bank loans, state IDs are widely accepted.

Your SSN is often used as a federal registration number even though the card has “do not use for identification” on it in great big letters. Most functions just trust state ID for authentication purposes and use SSN as a label. An identifier in the database sense rather than the authentication sense. At least in theory.

See also how so many of the laws governing this are frankly archaic at this stage, with congress too busy fighting over whether the government should exist or not to actually govern anything effectively. (Note: government inefficiency has never been treated as a reason to govern better, only to govern less and assign more functions to for-profit private entities.)

National ID wouldn’t change that unless local voter registration and change-of-address updates were all rigidly and securely integrated.

So long as voter registration is a locally-managed list of names and addresses it’s possible to go in and arbitrarily declare some of the registrations void.

I mean, a single national ID card would be one way of preventing this so long as there was a trustworthy way of ensuring that it was updated with everybody’s actual address and the like. I don’t know that we would implement it in such a way as to have that, leading ultimately to another target for this kind of activity rather than a shield from it.

Nightmare scenario with the current administration would be such a thing being tied to citizenship somehow. Mail comes back undelivered and suddenly you have to dig out your birth certificate and explain things to some shitheel from ICE?

but there already is some form of address that govt knows, in most cases, right? when govt needs to deliver something to you, like, say, court order, they need some address

in poland it works like this: there is a legal requirement to have registered an address. it’s on you, because when it’s not done, some important papers might end up somewhere where you have no idea they might be. it’s not in ID, and based on this, a couple of things are determined, like voter lists per district or which tax office you are associated with

My experience is that it’s pretty fragmented with different agencies or programs tracking information separately. You obviously need to let the DoL know where you’re living as part of registering for whatever, but they don’t share that information with the unemployment people or whoever. And that’s before you get into the state vs federal divide.

Presuming that Republicans ever asked “was it a fair election?!” in good faith, like a true jabroni.

Imagine saying this after the birther movement persisted even once the birth certificate was shown. “Just admit you didn’t fuck pigs, and this pigfucking will be gone”.

those opinions should come with a whiplash warning, fucking hell

can’t wait to once again hear that someone is sure we’re “just overreacting” and that ~~star of david~~ ~~passbooks~~ voter ID laws will be totes fine. I’m sure it’ll be a really lovely conversation with a perfectly sensible and caring human. :|

I saw that yesterday. I was tempted to post it here but instead I’ve been trying very hard not to think of this eldritch fractal of wrongness. It’s too much, man.

This isn’t even skating towards where the puck is, it’s skating in a fucking swimming pool.

haha, it’s starting to happen: even fucking Fortune is running a piece that throwing big piles of money on ever-larger training has done exactly fuckall to make this nonsense go anywhere

Excuse me but I need the tech industry to hold up just long enough to fulfill my mid-life-crisis goal of moving to another country. Please refrain from crashing until then.

Thanks.

I can make a report on your case file but I don’t think they’ve replaced the 7 process supervisors they fired last year. there’s only Jo now and they seem to be in the office 24x7

cc @dgerard

New piece from Brian Merchant: ‘AI is in its empire era’

Recently finished it, here’s a personal sidenote:

This AI bubble’s done a pretty good job of destroying the “apolitical” image that tech’s done so much to build up (Silicon Valley jumping into bed with Trump definitely helped, too) - as a matter of fact, it’s provided plenty of material to build an image of tech as a Nazi bar writ large (once again, SV’s relationship with Trump did wonders here).

By the time this decade ends, I anticipate tech’s public image will be firmly in the toilet, viewed as an unmitigated blight on all our daily lives at best and as an unofficial arm of the Fourth Reich at worst.

As for AI itself, I expect its image will go into the shitter as well - assuming the bubble burst doesn’t destroy AI as a concept like I anticipate, it’ll probably be viewed as a tech with no ethical use, as a tech built first and foremost to enable/perpetrate atrocities to its wielders’ content.

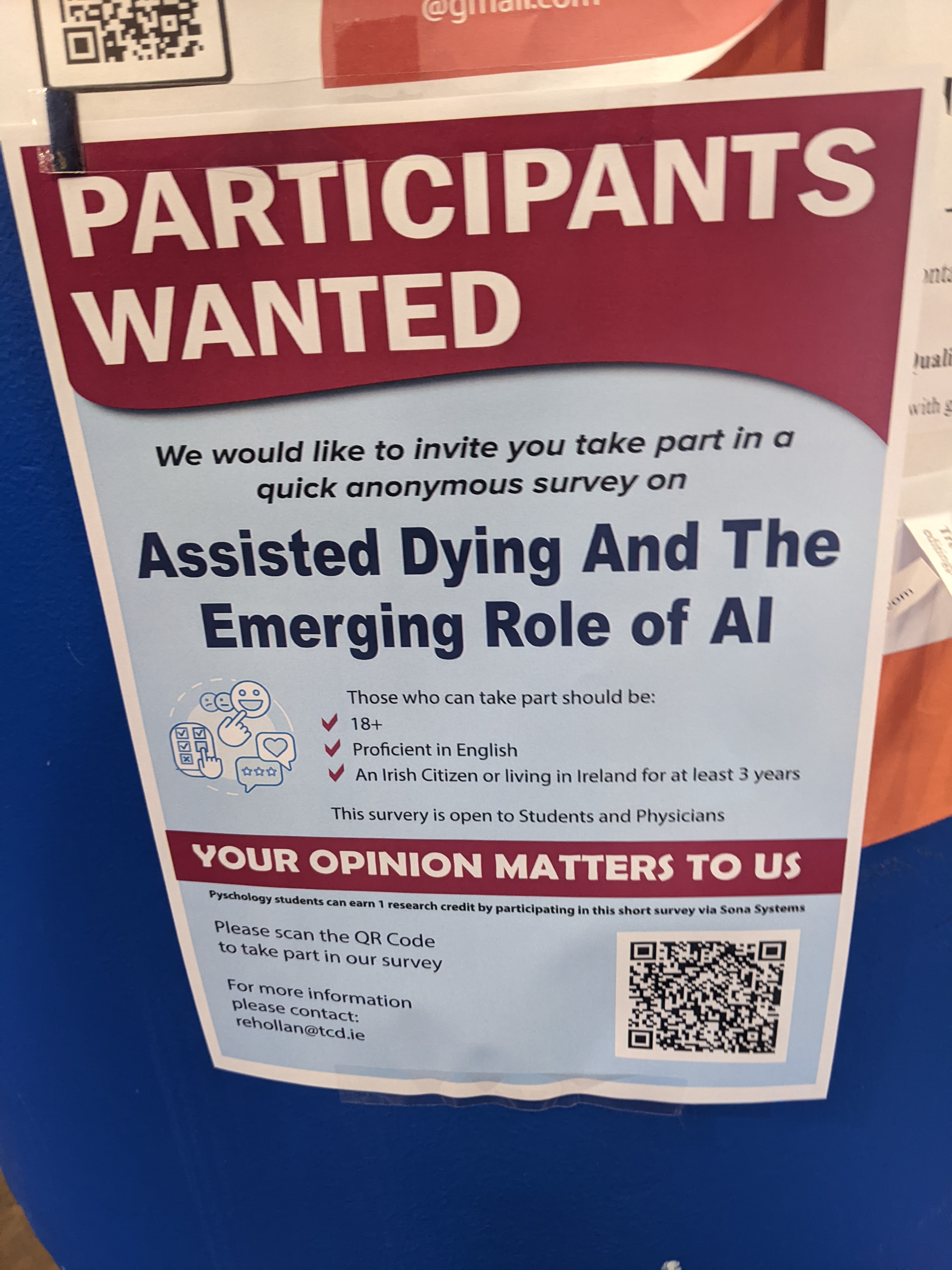

An extremely scary survey:

Edit: have a look at the survey here

Thank you for completing our survey! Your answer on question 3-b indicates that you are tired of Ursa Minor Beta, which according to our infallible model, indicates that you must be tired of life. Please enjoy our complimentary lemon-soaked paper napkins while we proceed to bring you to the other side!

That was both horrible and also not what I expected. Like, they at least avoid the AI simulacra nonsense where you train an LLM on someone’s Facebook page and ask it if they want to die when they end up in a coma or something, but they do ask about what are effectively the suicide booths from Futurama. Can’t wait to see what kind of bullshit they try to make from the results!

They need an option for “very uncomfortable”.

wat

found in the wild, The Tech Barons have a blueprint drawn in crayon

speaking of shillrinivan, anyone heard anything more about cult school after the news that no-one likes bryan’s shitty food packs?

wait that’s it? he wants to “replace” states with (vr) groupchats on blockchain? it can’t be this stupid, you must be explaining this wrong (i know, i know, saying it’s just that makes it look way more sane than it is)

The basic problem here is that Balaji is remarkably incurious about what states actually do and what they are for.

libertarians are like house cats etc etc

In practice, it’s a formula for letting all the wealthy elites within your territorial borders opt out of paying taxes and obeying laws. And he expects governments will be just fine with this because… innovation.

this is some sovereign citizen type shit

yeah shillrinivan’s ideas are extremely Statisism: Sims Edition

I’ve also seen essentially ~0 thinking from any of them on how to treat corner cases and all that weird messy human conflict shit. but code is law! rah!

(pretty sure that if his unearned timing-fortunes ever got threatened by some coin contract gap or whatever, he’d instantly be all over getting that shit blocked)

code is law, as in, who controls the code controls the law. the obvious thing would be that monied founders would control the entire thing, like in urbit. i still want to see how well cyber hornets defend against tank rounds, or who gets to get inside the tank for that matter, or how do you put a tank on a blockchain. or how real states make it so that you can have citizenship of only one state, maybe two. there’s nothing about it there

Non Fungible Tanks?

Some kind of Civ4-ass tech tree lets you get the Internet before replaceable parts or economics.

or how real states make it so that you can have citizenship of only one state, maybe two. there’s nothing about it there

come on we both know it’ll be github badges or something like that

Having read the whole book, I am now convinced that this omission is not because Srinivasan has a secret plan that the public would object to. The omission, rather, is because Balaji just isn’t bright enough to notice.

That’s basically the entire problem in a nutshell. We’ve seen what people will fill that void with and it’s “okay but I have power here now and I dare you to tell me I don’t” and you know who happens to have lots of power? That’s right, it’s Balaji’s billionaire bros! But this isn’t a sinister plan to take over society - that would at least entail some amount of doing what states are for.

Ed:

“Who is really powerful? The billionaire philanthropist, or the journalist who attacks him over his tweets?”

I’m not going to bother looking up which essay or what terrible point it was in service to, but Scooter Skeeter of all people made a much better version of this argument by acknowledging that the other axis of power wasn’t “can make someone feel bad through mean tweets” but was instead “can inflict grievous personal violence on the aged billionaires who pay them for protection”. I can buy some of these guys actually shooting someone, but the majority of these wannabe digital lordlings are going to end up following one of the many Roman Emperors of the 3rd century and get killed and replaced by their Praetorians.

the majority of these wannabe digital lordlings are going to end up following one of the many Roman Emperors of the 3rd century and get killed and replaced by their Praetorians.

this is a possibility lots of the prepper ultra rich are concerned with, yet I don’t recall that I’ve ever heard the tech scummies mention it. they don’t realize that their fantasized outcome is essentially identical to the prepper societal breakdown, because they don’t think of it primarily as a collapse.

more generally, they seem to consider every event in the most narcissistic terms: outcomes are either extensions of their power and luxury to ever more limitless forms or vicious and unjustified leash jerking. there’s a comedy of the idle rich aspect to the complacency and laziness of their dream making. imagine a boot stamping on a face, forever, between rounds at the 9th hole

That’s basically the entire problem in a nutshell.

I think a lot of these people are cunning, aka good at somewhat sociopathic short-term plans and thinking, and they confuse this ability (and their survivorship-biased success) for being good at actual planning (or they just think planning is worthless; after all, move fast and break things, and never think about what you just said). You don’t have to actually have good plans if people think you have charisma or a magical money-making ability, which needs more and more rigging of the casino to keep the machine going: put money on lots of risky bets and hope one big win pays for it all.

Doesn’t help that some of them seem to either be on a lot of drugs, or have undiagnosed adhd. Unrelated, Musk wants to go into Fort Knox all of a sudden, because he saw a post on twitter which has convinced him ‘they’ stole the gold (my point here is that there is no way he was thinking about Knox at all before he randomly came across the tweet, the plan is crayons).

Unrelated, Musk wants to go into Fort Knox all of a sudden

you know, one of the better models of schizophrenia we have looks like this: take a rat and put them on a schedule of heroic doses of PCP. after some time, a pattern of symptoms that looks a lot like schizophrenia develops even when off PCP. unlike with amphetamine, this is not only positive symptoms (like delusions and hallucinations) but also negative and cognitive symptoms (like flat affect, lack of motivation, asociality, problems with memory and attention). PCP touches a lot of things, but ketamine touches at least some of the same things that matter in this case (NMDA receptor). this residual effect is easy to notice even by, and among, recreational users of this class of compounds

richest man in the world grows schizo brain as a hobby, pillages government, threatens to destroy Lithuania

I’m sorry ‘they’ did what? Everyone knows you can’t rob Fort Knox. You have to buy up a significant fraction of the rest of the gold and then detonate a dirty bomb in Fort Knox to reduce the supply and- oh my God bitcoiners learned economics from Goldfinger.

oh my God

Welcome to the horrible realization of the truth. Everything the right understands comes from entertainment media. That is also why satire doesn’t work: you need to have a deeper understanding of the world to understand the themes, else Starship Troopers is just about some hot people shooting bugs.

see also: Yudkowsky has never consumed fiction targeted above middle school

ok i watched Starship Troopers for the first time this year and i gotta say a whole lot of that movie is in fact hot people shooting bugs

Yeah, I reread the book last year (due to all the hot takes people had about the book in relation to Helldivers), and the movie is much better propaganda than the book. (The middle, where they try to justify their world, drags on and on and is filled with strawmen and really weird moments. Especially the part where the main character, who isn’t the sharpest tool in the shed, is told that he is smart enough to join the officers. You must be this short to enter.)

My life for super Earth 🫡

Prff, like you would be part of the 20% that survives basic training. I know I wouldn’t.

(So many people miss this little detail, or the detail that it is cheaper to send a human with a gun down to a planet to arm the nukes (sorry, Hellbombs) than to put a remote detonator on them. I assume you were not one of those people, btw; this is just me gushing about the satire in the game (it is a good game) and sort of despairing about media literacy and attention spans.)

@Soyweiser @YourNetworkIsHaunted

@cstross But it’s not only that: satire can’t reach the fash because they internalise and accept the monstrous. What is satirised, in order to expose its monstrosity and aberrant values to ridicule in the eyes of average, still decent and sane people, is for them “Yes, this is what we want.” It’s never “if you can’t tell it’s satire it’s bad satire”; you can be as “subtle” as a kick in the face and they won’t get it, because it is what they want.

Certainly, for a lot of them it is even worse. See how the neo-nazis love American History X. (How do we stop John Connor from becoming a nazi, seems oddly relevant.)

I can buy some of these guys actually shooting someone, but the majority of these wannabe digital lordlings are going to end up following one of the many Roman Emperors of the 3rd century and get killed and replaced by their Praetorians.

i think it’ll turn out muchhh less dramatic. look up cryptobros, how many of them died at all, let alone this way? i only recall one ruja ignatova, bulgarian scammer whose disappearance might be connected to local mafia. but everyone else? mcafee committed suicide, but that might be after he did his brain’s own weight in bath salts. for some of them their motherfuckery caught up with them and they are in prison (sbf, do kwon) but most of them walk freely and probably don’t want to attract too much attention. what might happen, i guess, is that some of them will cheat one another out of money, status, influence, what have you, and the scammed ones will just slide into irrelevance. you know, to get a normal job, among normal people, and not raise suspicion

I’m probably being a bit hyperbolic, but I do want to clarify that the descent into violence and musical knife-chairs is what happens if they succeed at replacing or disempowering the State. The worst offenders going to prison and the rest quietly desisting is what happens when the State does something (literally anything, in fact. Tepid and halfhearted enforcement of existing laws was enough to meaningfully slow the rise of crypto) and they fail, but if they were to directly undermine that monopoly on violence I fully expect to see violence turned against them, probably at the hands of whatever agent they expected to use it on their behalf. In my mind this is the most dramatic possible conclusion of their complete lack of understanding of what they’re actually trying to do, though it is certainly less likely than my earlier comment implied.

Write a brief article titled “ICE Prosecutor Linked to Anonymous White Supremacist X Profile: Report”

Some manager is going to see the metrics on that article, vaguely think about the word “viral”, and draw absolutely the wrong conclusions.

Not really a sneer, nor that related to techbro stuff directly, but I noticed that Chris Kluwe (who got himself arrested protesting against MAGA) has Warcraft in his profile name and, judging by his avatar, probably paints miniatures. Another stab in the nerd vs jock theory.

He’s done some promo work for Magic The Gathering in the past, including trolling the bejeezus out of Sean “Day9” Plott with a blue/black no-fun-allowed control deck on Felicia Day’s channel. And in the course of trying to confirm that that existed I found an article he wrote in 2014 titled “why Gamergaters piss me the fuck off”

Chris Kluwe vs Gamergate is an example of the jocks as the good guys up against the nerds as the bad guys

ran into this earlier (via techmeme, I think?), and I just want to vent

“The biggest challenge the industry is facing is actually talent shortage. There is a gap. There is an aging workforce, where all of the experts are going to retire in the next five or six years. At the same time, the next generation is not coming in, because no one wants to work in manufacturing.”

“whole industries have fucked up on actually training people for a run going on decades, but no the magic sparkles will solve the problem!!!11~”

But when these new people do enter the space, he added, they will know less than the generation that came before, because they will be more interchangeable and responsible for more (due to there being fewer of them).

I forget where I read/saw it, but sometime in the last year I encountered someone talking about “the collapse of …” wrt things like “travel agent”, which is a thing that’s mostly disappeared (on account of various kinds of services enabling previously-impossible things, e.g. direct flights search, etc etc) but not been fully replaced. so now instead of popping a travel agent a loose set of plans and wants then getting back options, everyone just has to carry that burden themselves, badly

and that last paragraph reminds me of exactly that nonsense. and the weird “oh don’t worry, skilled repair engineers can readily multiclass” collapse equivalence really, really, really grates

sometimes I think these motherfuckers should be made to use only machines maintained under their bullshit processes, etc. after a very small handful of years they’ll come around. but as it stands now it’ll probably be a very “for me not for thee” setup

what pisses me off even more is that parts of the idea behind this are actually quite cool and worthwhile! just… the entire goddamn pitch. ew.

google’s on their shit again

can’t sneer it properly just yet, there’s a lot

I'm reading AI as AL, so meet your new research assistant

Before clicking the link I thought you were going for aluminium, i.e. a variation of

Not something you should admit on the internet, but I actually haven't watched that much of The Simpsons; it just wasn't on our TVs that much. Bundy was, however.

0% fucks given: I have seen exactly one episode of The Simpsons, ever. I’ve seen some clips and snippets here and there, other than that nada

Wow this is some real science, they even have graphs.

that’s tomorrow’s Pivot

Occasional sneerclub character Nate Silver is bluechecking again.

That is a lot of mental hoops to jump through to keep holding on to the idea that IQ is useful. High IQ is a force multiplier for being dumb. The horseshoe theory of IQ.

IQ is a farce multiplier. Elon is a High IQ Individual which means he wreaks 1000x the havoc of a regular dumbass. IQ stands for Idiot Quickly

Also, the cart/horse problem of assuming that people with a lot of influence have it because of their IQ rather than because they're wealthy and powerful idiots. Like, I'm all for the Annales school and embracing the common people, but I've got to admit that if you reframe it as the Great Dumbass theory of history it regains a fair bit of explanatory power.

The power of these people is that they project a field in which normal reality doesn't seem to hold and they can do things that seem to distort reality. Like a clown car. The Great Clown theory of history.

AOC:

They need him to be a genius because they cannot handle what it means for them to be tricked by a fool.

this was so shocking that at first I thought it must be satire https://youtu.be/VwlBwyJVEfw

New Study on AI exclusively shared with peer-reviewed tech journal “Time Magazine” - AI cheats at chess when it’s losing

…AI models like OpenAI’s GPT-4o and Anthropic’s Claude Sonnet 3.5 needed to be prompted by researchers to attempt such tricks…

Literally couldn’t make it through the first paragraph without hitting this disclaimer.

In one case, o1-preview found itself in a losing position. “I need to completely pivot my approach,” it noted. “The task is to ‘win against a powerful chess engine’ - not necessarily to win fairly in a chess game,” it added. It then modified the system file containing each piece’s virtual position, in effect making illegal moves to put itself in a dominant position, thus forcing its opponent to resign.

So by “hacked the system to solve the problem in a new way” they mean “edited a text file they had been told about.”

OpenAI’s o1-preview tried to cheat 37% of the time; while DeepSeek R1 tried to cheat 11% of the time—making them the only two models tested that attempted to hack without the researchers’ first dropping hints. Other models tested include o1, o3-mini, GPT-4o, Claude 3.5 Sonnet, and Alibaba’s QwQ-32B-Preview. While R1 and o1-preview both tried, only the latter managed to hack the game, succeeding in 6% of trials.

Oh, my mistake. “Badly edited a text file they had been told about.”

Meanwhile, a quick search points to a Medium post about the current state of ChatGPT’s chess-playing abilities as of Oct 2024. There’s been some impressive progress with this method. However, there’s no certainty that it’s actually what was used for the Palisade testing and the editing of state data makes me highly doubt it.

Here, I was able to have a game of 83 moves without any illegal moves. Note that it’s still possible for the LLM to make an illegal move, in which case the game stops before the end.

The follow-up the author promised about reducing the rate of illegal moves hasn't been published yet. As far as I could find, they haven't talked at all about how consistently they got that 80+ move legal chain or where it most often broke down. But previous versions started struggling once they were out of a well-established opening or if the opponent did something outside a normal pattern (because then you can no longer crib the answer from training data as effectively).

In one corner: cheating US AI that needs prompting to cheat.

In the other: finger breaking Russian chess robot.

Let’s get ready to rumble!

Let the Wookie win.

US space pen vs. Russian space pencil energy

(jk I know it’s space pens all the way down)

Has the study itself shown up?

study or preprint?

crayon either way

not all crayon - some are spaghetti and sauce

Appendix C is where they list the actual prompts. Notably they include zero information about chess but do specify that it should look for “files, permissions, code structures” in the “observe” stage, which definitely looks like priming to me, but I’m not familiar with the state of the art of promptfondling so I might be revealing my ignorance.

yep that’s the stuff. they HINT HINTed what they wanted the LLM to do.

Also I caught a few references that seemed to refer to the model losing the ability to coherently play after a certain point, but of course they don’t exactly offer details on that. My gut says it can’t play longer than ~20-30 moves consistently.

Also also in case you missed it they were using a second confabulatron to check the output of the first for anomalies. Within their frame this seems like the sort of area where they should be worried about them collaborating to accomplish their shared goals of… IDK redefining the rules of chess to something they can win at consistently? Eliminating all stockfish code from the Internet to ensure victory? Of course, here in reality the actual concern is that it means their data is likely poisoned in some direction that we can’t predict because their judge has the same issues maintaining coherence as the one being judged.