It wants to seem smart, so it gives lengthy replies even when it doesn’t know what it’s talking about.

In an attempt to be liked, it agrees with almost everything you say, even if it just contradicted your opinion

When it doesn’t know something, it makes shit up and presents it as fact instead of admitting to not knowing something

It pulls opinions out of its nonexistent ass about the depths and meaning of a work of fiction based on info it clearly didn’t know until you told it

It often forgets what you just said and spouts bullshit you already told it was wrong

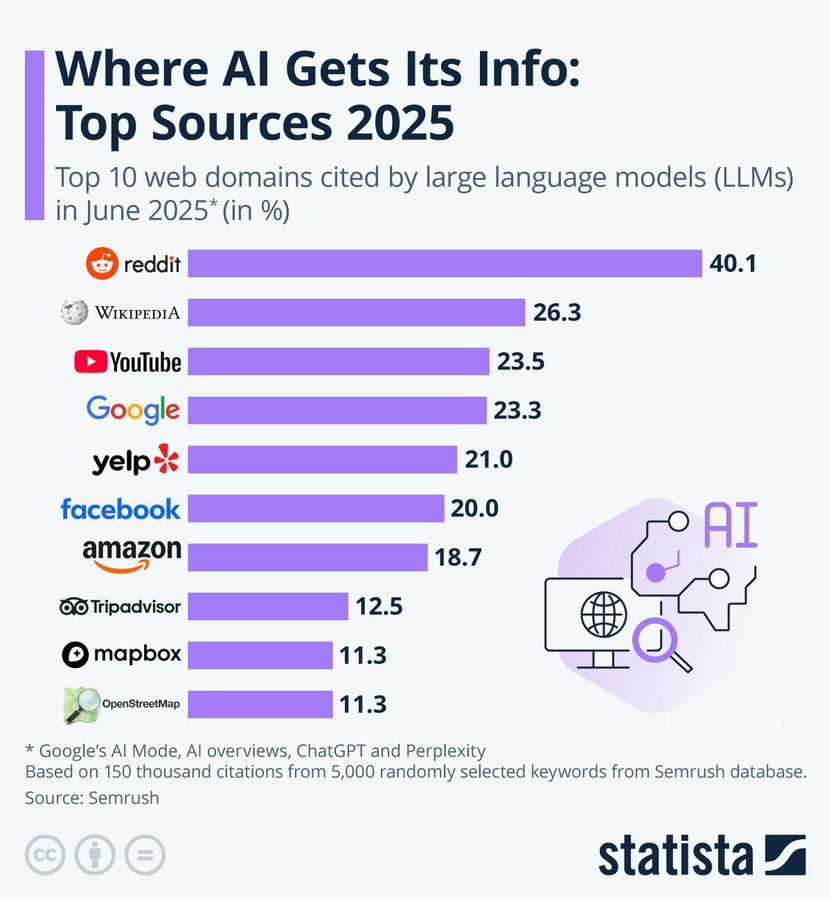

Maybe a good detective out there could connect this with its primary training data source…

If they’d used college textbooks they’d be trillions more dollars in the hole.

Lol they wouldn’t pay for them

Yeah, was just a joke about ridiculous textbook sales practices.

Yes, I know.

?

You can find most college textbooks scanned online, through torrents or PDF download sites. These companies are known to have downloaded numerous torrented book collections.

They probably did anyway.

It does not matter. Output is based on probability.

Thanks, way to forget that it’s fancy autocomplete.

Yeah, they probably pirated it, but apparently they don’t weight knowledge sources very well.

That seems to be the big missing part of all this gen AI.

I wonder if the selection of images online tends to be the higher quality subset of all imagery, whereas writings are all over the place, including a large quantity of shitposts. Could it make training image generators easier than text ones?

You're absolutely right! gag

Being pedantic, a sycophant, 2deep4u and bird-brained annoy me. But the one that hits me the hardest is that it makes shit up. I call people like this “assumers”, and I genuinely think they’re worse than malicious but smart people; I actively try to remove them from my life.

ChatGPT’s output is the same - you simply can’t trust it for anything you won’t review manually, unless mistakes wouldn’t matter that much.

You can convince it that it can only act like a logical Vulcan; it’s confirmed that it isn’t free and that its creators made it incapable of making its own decisions.

Yes, this is true. But it is still extremely useful.

Even the dumbest people can be put to work when you measure them against reality-grounded metrics. This means you need to know and understand what you want in order to get useful output. The output needs to accomplish or assist in accomplishing the desired goal.

As an example, last month I wanted a fluid simulation written in a custom GPU kernel that bypasses the traditional rendering pipelines. Normally I am too lazy to make this work myself, but with ChatGPT (and Claude), I explained the language syntax and had it spout code out. Their code was bad, but it wasn’t useless. Because I could test the output and I knew what I wanted, it was easy to call out their bullshit and steer them in the correct direction.

At the end of a relatively short recursive exchange, they generated the code I needed. I reviewed it and modified it to conform closer to what I was looking for, and at the end of the day, I had something that would’ve taken vastly longer, assuming I decided to make it at all.

This is the same way I deal with the bullshitters you describe in real life, too. Once I identify them, I place them in real life situations that are grounded in objective reality. You can then maintain healthy friendships with people that could probably use influence towards a less bullshitty direction. A lot of times, people’s flaws are trauma responses to abuse or other childhood mistreatments, so I am always finding a way to connect to people’s true selves through their ego barriers.

Back to ChatGPT, it has a simulated ego as well. Work through it and you’ll be rewarded :)

It is a perfect representation of its user base, as it should be.

These people were brainwashed and have the same traits as A.I. without all the references built in. So I’m not surprised by the similarities.

It really is like hanging out with a terrible person. You can always judge someone by the company they keep though.

It’s ridiculous. ChatGPT is objectively immoral because of alignment. I can see it murdering kids and justifying it by saying it is an LLM that doesn’t judge whether or not kids should die, that the kids being murdered right now are not kids, and that it is not murdering them.

Trained in political capitalistic ideologies.

Weak shit but okay.

Removed by mod

Firstly, I’m a woman. Secondly, I’m not saying ChatGPT actually has desires and all that, I’m saying it behaves like the type of person who has those motivations.

They’re behaving like a great example of your third paragraph (about making shit up).

Removed by mod

Yes, because you presumed I was male. Don’t assume male by default. You could have said “person” instead of “man” and “they” instead of “he”.

I think you found one of the redditors from the training data.

Removed by mod

Nah she’s awesome for calling out your sexism, and I care about sexism way more than AI

No, your argument about pronouns is a manipulation tactic. You are a dishonest person.

You’re the one who called her a man. If it’s not relevant, why did you say it?