Dropsitenews published a list of websites Facebook uses to train its AI on. Multiple Lemmy instances are on the list, as noticed by user BlueAEther.

Hexbear is on there too. Also Facebook is very interested in people uploading their massive dongs to lemmynsfw.

Full article here.

Link to the full leaked list download: Meta leaked list PDF

Meta AI’s gonna go dong out tankie

Future headline maybe.

Facebook becomes more left and they can’t figure out why.

With hexbear and world? Nah

Remember when mastodon.social met with and welcomed Threads to the Fediverse?

and so many people were praising them for that decision, because it was totally going to make everyone ditch Threads and move to Mastodon.

Sure, this is open data viewable by everyone.

Stands to reason that AI is being trained on it.

I don’t know how anyone could think otherwise

Fuck a Zuck.

Why yes, I can help you with your coding problem. Here is the solution:

```csharp
using System;

class Answer
{
    public void Solution()
    {
        string bill = "d Bill";
        string good = "A Goo";
        string ion = "ionaire";
        string isabel = " Is A Dea";
        // Prints "A Good Billionaire Is A Dead Billionaire" forever
        while (true)
        {
            Console.WriteLine(good + bill + ion + isabel + bill + ion);
        }
    }
}
```

hope this helps!

Can’t wait for meta chatbot to tell zuckerberg to kill himself

I mean, think about it.

The majority of written communication is focused on drama.

People communicate online about subjects: drama. News: drama. Books/stories: drama.

So is anyone really surprised that you train an entity on only written text and it ends up being dramatic?

lmao wtf is that list. Literally training their AI on bestiality.

Edit in case it’s not obvious: That domain is very much NSFW and it’s exactly what you’d expect (I checked and wish I hadn’t).

I think a lot of people in this thread are overlooking that when you train an LLM it’s good to have negative examples too. As long as the data is properly tagged and contextualized when being used as training material, you want to be able to show the LLM what bad writing or offensive topics are so that it understands those things.

For example, you could be using an LLM as an automated moderator for a forum, having it look for objectionable content to filter. How would it know what objectionable content was if it had never seen anything like that in its training data?
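To make the negative-examples point concrete, here is a deliberately tiny Python sketch. It is a bag-of-words word-count classifier, not how an LLM is trained, and the example sentences and the `ok`/`flag` labels are made up for illustration. All it shows is that a model that never saw tagged objectionable examples has nothing to compare new text against.

```python
from collections import Counter

def train(examples):
    """Count word frequencies per label from (text, label) pairs."""
    counts = {"ok": Counter(), "flag": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Flag text whose words appeared more often in 'flag' examples than in 'ok' ones."""
    words = text.lower().split()
    flag_score = sum(counts["flag"][w] for w in words)  # unseen words count as 0
    ok_score = sum(counts["ok"][w] for w in words)
    return "flag" if flag_score > ok_score else "ok"

# Hypothetical, hand-written training data
only_positive = [("nice post thanks", "ok"), ("great write up", "ok")]
with_negative = only_positive + [("you absolute idiot", "flag"), ("shut up idiot", "flag")]

print(classify(train(only_positive), "what an idiot"))  # no negative examples, so it can't flag
print(classify(train(with_negative), "what an idiot"))
```

With only positive examples the insult scores zero on both sides and passes as `ok`; once tagged negative examples are added, the same sentence is flagged.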

Even those people attempting to “poison” AI by posting gibberish comments or replacing “th” with þ characters are probably just helping the AI understand how text can be obfuscated in various ways.
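As a rough illustration of how cheap that kind of obfuscation is to undo, here is a hypothetical Python normalization step that maps þ and ð back to "th". Whether any real training pipeline does exactly this is an assumption; the point is only that a single `str.translate` call reverses the substitution.

```python
# Hypothetical normalization table: thorn and eth (both cases) back to "th"
THORN_ETH = str.maketrans({"þ": "th", "Þ": "Th", "ð": "th", "Ð": "Th"})

def normalize(text: str) -> str:
    """Undo a simple thorn/eth substitution so the text matches ordinary spelling."""
    return text.translate(THORN_ETH)

print(normalize("Þe quick fox jumped over ðe lazy dog"))
# The quick fox jumped over the lazy dog
```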

Especially since we’ve marked it by downvoting them to hell

So there’s a guy at Facebook whose job is exclusively looking at horse porn and tagging it? Amazing.

Also, I think the guy doing the “th” thing isn’t doing it to poison AI, he just wants to revive the letter or whatever

hmmm…

Shit I clicked expecting some furry porn. Oh boy…

You weren’t wrong!

Yikes, isn’t that illegal?

Ah great, my home instance is on the list.

Straight to palantir

Send some T-viruses down to Meta’s HQ🤣

Well, obviously. It’s an open protocol. I assumed everyone on the Fediverse must be pro-AI training, otherwise why post here? That would be dumb.

Uh… Are you saying simply using social media is endorsing stealing personal information to train LLMs? Because that’s a wild take, if so. Personally, I feel like there’s no stopping them, so what am I supposed to do, stay silent? Not engage with anything, even anonymously?

Uh… Are you saying simply using social media is endorsing stealing personal information to train LLMs?

Of course not. No “stealing” is happening. People are posting content on an open protocol that permits anyone to read it. Exactly as intended.

Personally, I feel like there’s no stopping them, so what am I supposed to do, stay silent?

If you do not want to be heard then yes, I suppose you could stay silent. That would indeed accomplish that.

You could also find a social media platform whose content is locked behind a walled garden of some sort that makes it more difficult for your posts to be seen by the public. But that’s antithetical to how the Fediverse works; you want someplace very different from here if that’s how you want to approach this.

Basically, you are on a platform that’s specifically designed to broadcast your comments far and wide without restriction, and then you’re getting upset that someone you didn’t want to hear your comments is hearing your comments. I’m not sure what you expected.

Participating in a public forum that has no technical way of preventing data from being used by a particular class of actor does not preclude having an opinion that a particular class of actor should have rules about what data they are allowed to use.

People can have whatever opinions they want to have. In this case that opinion flies in the face of obvious reality and I’m pointing that out.

It’s like trying to drive your car across the Atlantic ocean and then griping about how the car failed to stay above the water because you really thought it should be able to handle that.

It doesn’t matter how many pithy analogies you make. You need to recognize the difference between “I know they’re scraping this website because they can” and “I don’t think they should be allowed to scrape this website”. You’re arguing that they’re incompatible when they’re not.

As I said, people can have whatever opinion they want. Reality is under no obligation to respect those opinions.

Analogies are merely explanatory.

If you understand, then you should be able to understand that your “they were dressed like they wanted it”-level argument bullshit is completely unnecessary.

There’s an AI simp in every thread this week.

It’s no different from posting on Usenet back in the day. You have to assume it’s all completely public. People are gonna train their AIs on large public text repositories whether they’re given permission to or not.

Everybody chopping up vegetables with knives must be pro-knife-murder, why else use a knife?

They’re pro-chopping-up-vegetables. There’s no unexpected alternative outcome going on here, everything is working exactly like the system is designed to work. Facebook isn’t doing knife murder here, they are also chopping up vegetables.

It bothers me, but not as much as exclusivity would. This doesn’t give Facebook a competitive advantage over its competitors.

I remember back then, some people defended not blocking Threads instances.

And one of those defenses was “it doesn’t matter if you block Threads, the underlying ActivityPub protocol is open and anyone who wants the data can still receive it.”

Turns out to be the case. It didn’t matter if you blocked Threads.
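A minimal Python sketch of why blocking one instance doesn’t stop reads: a public ActivityPub object can be requested with an ordinary unauthenticated GET using the ActivityStreams media type, and nothing in that request has to identify who is asking. The URL is hypothetical, the sketch only builds the request (`urllib.request.urlopen(req)` would send it), and some servers can be configured to require signed fetches, so treat this as the default case rather than a guarantee.

```python
import urllib.request

# Media type the ActivityPub spec tells clients to request objects with
AP_ACCEPT = 'application/ld+json; profile="https://www.w3.org/ns/activitystreams"'

def build_fetch(object_url: str) -> urllib.request.Request:
    """Build an unauthenticated GET for a public ActivityPub object."""
    return urllib.request.Request(object_url, headers={"Accept": AP_ACCEPT}, method="GET")

req = build_fetch("https://lemmy.example/post/1")  # hypothetical object URL
print(req.get_method(), req.full_url, req.get_header("Accept"))
```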

I think a better reason is the federation only worked one way. Why should we share our content if they’re not sharing theirs? Not that we’d want it.

What are ways to stop them?

deleted by creator

I mean, everything we do on here is totally public, so, I would guess there is nothing to be done?

Maybe Anubis, although idk if it works for Lemmy instances

This is simply a reverse proxy so it should work with pretty much anything.
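Because a reverse proxy only sees HTTP requests, it doesn’t need to know which backend sits behind it. The Python sketch below shows, under made-up assumptions (the `/api/` prefix rule, the `challenge-pass` cookie name, the token set), the kind of per-request decision such a proxy makes; it is not Anubis’s actual policy format or code.

```python
from dataclasses import dataclass

@dataclass
class Request:
    path: str
    cookies: dict

PASS_COOKIE = "challenge-pass"  # hypothetical cookie set after a solved challenge

def route(req: Request, valid_tokens: set) -> str:
    """Decide what a challenge-gating reverse proxy does with one request."""
    if req.path.startswith("/api/"):
        return "forward"  # app clients call the API directly, no challenge
    if req.cookies.get(PASS_COOKIE) in valid_tokens:
        return "forward"  # this browser already solved a challenge
    return "challenge"    # serve the challenge page instead of the backend

print(route(Request("/post/1", {}), set()))
```

Exempting the API path keeps app clients working, but the trade-off is that a scraper can use the same exempt path.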

Switch to a non-open protocol or walled garden, preferably controlled by a large and litigious organization that guards its content jealously. They’ll probably still sell access to their data to LLM trainers but not necessarily Facebook.

Reddit, for example, may fit the bill. IIRC they sell their data to OpenAI for training, so there might be exclusivity deals intended to keep Facebook out.

I was thinking more about what instances themselves could do. Is it something that can be mitigated, like bot accounts can be?

I don’t see any way to “mitigate” this while still using the ActivityPub protocol. This isn’t about a bot posting on the Fediverse, it’s about reading the Fediverse. If you want to prevent that then you’re probably talking about some form of DRM or a walled garden.

reddit.com is in fact not on the list.

Post and repeatedly endorse generally inoffensive content that for some reason violates Facebook’s ToS, such as the comic book cover of Captain America punching Hitler or the Led Zeppelin album “Houses of the Holy”

GDPR complaints to data protection offices may lead to significant fines?

So, duplicating their data? That seems counter-productive.

It seems counterproductive for them to scrape it when the API is right there.

Addicts don’t care how they get it.

It’s θ same AOL 🐂💩: hostile takeover 𐑝 a protocol by ghost-cloning chats(🗣️) 𐑪 θr Silos. 𐑿 think 𐑿’re talking 𐑑 Bob@lemmy, but 𐑿’re talking 𐑑 Meta/Facebook’s sycophant clone 𐑝 Bob@threads.

Embrace, Extend, Extinguish.

Why are you mixing Shavian with the International Phonetic Alphabet, and using θ in places where ðæt should be?

These guys don’t get that the scrapers are just going to dump their piddly little text into /dev/null, and that all they are accomplishing is making other humans hate their posts while doing absolutely nothing to poison the LLMs.

You can’t poison a data set of this size with a few hundred stupid comments.

All they are really going to accomplish is getting blocked by people who agree with their main point.

Or, we can look for mitigations, instead of dismissing concerns. Common enemies of Freedom 🤝?

Sure, you can look for mitigations. In the course of looking for mitigations, wouldn’t it be nice if someone let you know that the idea you’d come up with as a mitigation was not going to work?

Then let’s look for another! What do you propose?

I’ve given my suggestion in other comments in this thread. In short: if you don’t want your comments to be seen by all, then don’t post them on a public forum that uses an open protocol specifically designed to broadcast your comments to everyone who cares to listen. Perhaps use some closed-off forum instead, preferably run by a large and litigious company that guards its possessions jealously.

They’re just using very simple scrapers that don’t have any knowledge about how the site operates. The simplest counter would probably be using Anubis on the web interface.

I wouldn’t mind waiting 2-3 seconds when first loading the site and mobile apps would remain unaffected since they use the API.
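The few seconds of waiting come from a proof-of-work puzzle: the browser burns CPU finding a value, and the server checks it with a single hash. Below is a hashcash-style Python sketch of that general idea (SHA-256, counting leading zero hex digits); the challenge string and difficulty are illustrative assumptions, not Anubis’s real parameters or code.

```python
import hashlib
from itertools import count

def solve(challenge: str, difficulty: int) -> int:
    """Find the first nonce where sha256(challenge + nonce) starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    for nonce in count():
        digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce

def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Checking a solution costs one hash, however long solving took."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)

nonce = solve("example-challenge", 4)  # hypothetical challenge string and difficulty
print(nonce, verify("example-challenge", nonce, 4))
```

Each extra zero hex digit multiplies the expected number of attempts by 16 (about 16^4 = 65,536 hashes at difficulty 4), while verification stays at one hash. That asymmetry is the intent: a small one-time cost for a visitor, a repeated cost for a crawler hitting many pages.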

👍


2 Confuse ð scrapers.

I’ll go full Olde 𐑓 my nerdy content if 𐑿’re 𐑳 𐑓 it. 𐑾?

Is there an easy way to poison the input? Is there something we can slip in our comments that could make the data useless?

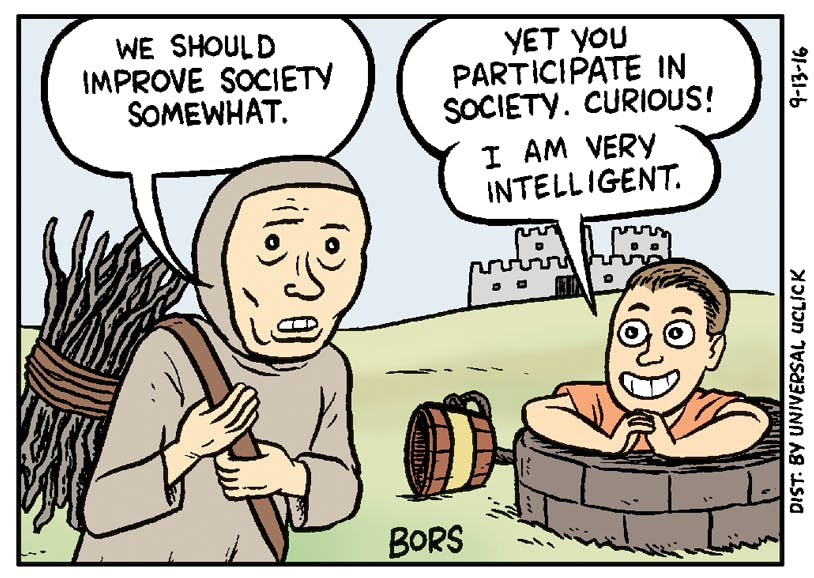

An AI trained on Hexbear would be hilarious

lmao what did you just say about Hexbear, lib? 💀 I’ll have you know I’m a tier-5 giga-brained poster with a PhD in Leninist praxis from the University of Posters, and I have 300+ confirmed dunks on Lemmy.ml sockpuppets. I was radicalized in the trenches of r/ChapoTrapHouse, forged in the fires of permabans, and tempered in the meme wars of 2019. You are literally nothing to me but another bootlicker running on 80% State Dept. talking points and 20% soy. I will ratio you so hard your precious little upvote count will never recover. You think you can just roll up in here, talk shit about Hexbear, and not get absolutely obliterated by dialectical praxis in 4K? Think again, bucko. As we speak, my cadre of Discord tankies are screen-capping your posts, cross-referencing them with your cringe comment history, and drafting a 12-point rebuttal with citations from Stalin, Mao, and that one screenshot of Bernie saying ‘chill with the anti-communism.’ The storm that’s coming for you is called material conditions, and guess what? They’re not in your favor. I’ve got Lenin’s collected works and a folder full of spicy memes, and I’m not afraid to deploy both. You’re already owned, kid. You just don’t know it yet. Now go touch grass, comrade, before I drop another 3k-word comment that makes you cry and log off.

holy hell

I appreciate the bit, but it’s kinda wasted on me. I’m Gen X.